- Container Networking Is Simple!

- What Actually Happens When You Publish a Container Port

- How To Publish a Port of a Running Container

- Multiple Containers, Same Port, no Reverse Proxy...

- Service Proxy, Pod, Sidecar, oh my!

- Service Discovery in Kubernetes: Combining the Best of Two Worlds

- Traefik: canary deployments with weighted load balancing

Don't miss new posts in the series! Subscribe to the blog updates and get deep technical write-ups on Cloud Native topics direct into your inbox.

The only "official" way to publish a port in Docker is the -p|--publish flag of the docker run (or docker create) command. And it's probably for the best that Docker doesn't let you expose ports on the fly easily. Published ports are part of the container's configuration, and modern infrastructure is supposed to be fully declarative and reproducible. If Docker encouraged modifying the container's configuration at runtime, it would hurt the reproducibility of container setups.

But what if I really need to publish that port?

For instance, I periodically get into the following trouble: there is a containerized Java monster web service that takes (tens of) minutes to start up, and I'm supposed to develop/debug it. I launch a container and go grab some coffee. But when I'm back from the coffee break, I realize that I forgot to expose port 80 (or 443, or whatever) to my host system. And the browser is on the host...

There are two (quite old) StackOverflow answers (1, 2) suggesting a bunch of solutions:

- Restart the container exposing the port, potentially committing its modified filesystem in between. This is probably "the right way," but it sounds too slow and boring for me.

- Modify the container's config file manually and restart the whole Docker daemon for the changes to be picked up. This solution likely causes the container's restart too, so it's also too slow for me. Also, I doubt it's future-proof, even though it keeps being suggested 9 years later.

- Access the port using the container's IP address, like curl 172.17.0.3:80. This is a reasonable suggestion, but it works only when that container IP is routable from the place where you have your debugging tools. Docker Desktop (or Docker Engine running inside of a Vagrant VM) makes it virtually useless.

- Add a DNAT iptables rule to map the container's socket to the host's. That's what Docker Engine itself would do had you asked it to publish the port in the first place. But are you an iptables expert? Because I'm not. And also, it has the same issue as the above piece of advice - the container's IP address has to be routable from the host system.

- Start another "proxy" container in the same network and publish its port instead - finally, a solution that sounds good to me ❤️🔥 Let's explore it.

Tiny bit of theory

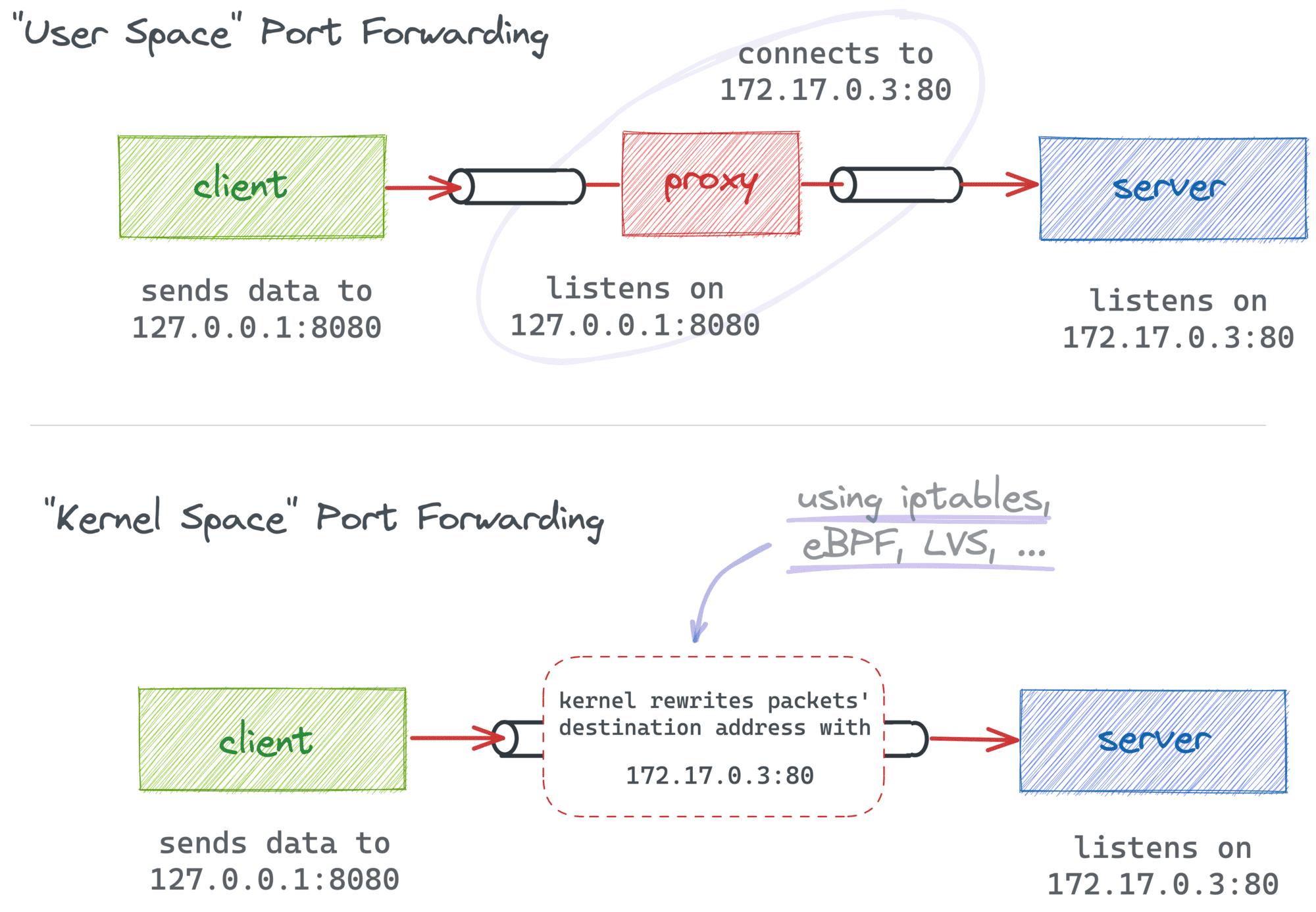

Container port publishing is a form of port forwarding, i.e., good old socket address redirection. It can be implemented with either modification of the packet's destination address (on L3) or by using an intermediary proxy process forwarding only the payload data:

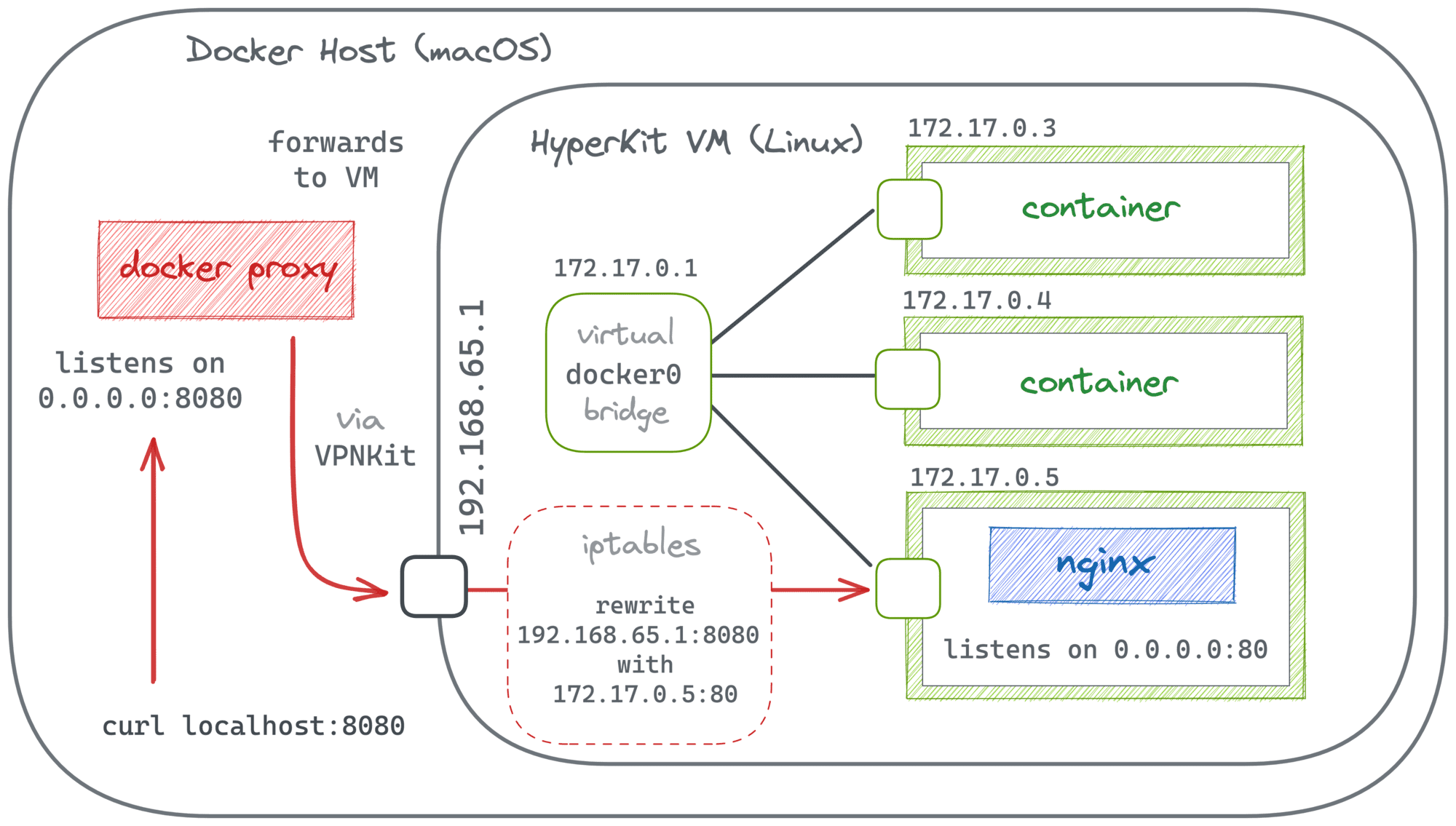
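To make the two approaches a bit more tangible, here is a rough sketch of what each one could look like on a plain Linux box. The helper names (dnat_rule, proxy_cmd), the address 172.17.0.3, and the ports are made up for illustration. The functions only print the commands instead of running them, because the first one needs root and the second one needs socat installed. The DNAT rule is a simplified stand-in, not the exact rule Docker installs, and it only covers packets arriving from other machines.

```shell
# Approach 1: rewrite the packet's destination address in the kernel (DNAT).
# Prints (does not run) an iptables rule; applying it would require root.
# $1 = host port, $2 = container IP, $3 = container port
dnat_rule() {
  echo "iptables -t nat -A PREROUTING -p tcp --dport $1 -j DNAT --to-destination $2:$3"
}

# Approach 2: a userspace proxy that accepts connections on the host port
# and relays only the payload to the container's socket.
# $1 = host port, $2 = container IP, $3 = container port
proxy_cmd() {
  echo "socat TCP-LISTEN:$1,fork TCP-CONNECT:$2:$3"
}

# Example values only.
dnat_rule 8080 172.17.0.3 80
proxy_cmd 8080 172.17.0.3 80
```

In the first case, the kernel rewrites packets and the original connection reaches the container directly. In the second case, there are two separate TCP connections, and the proxy process copies bytes between them.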

In the case of Docker port publishing, it's actually a combination of both approaches. Traditionally, Docker Engine relies on in-kernel packet modification (with iptables rules), while Docker Desktop adds a higher-level proxy on top of that, making the implementation work on non-Linux systems too:

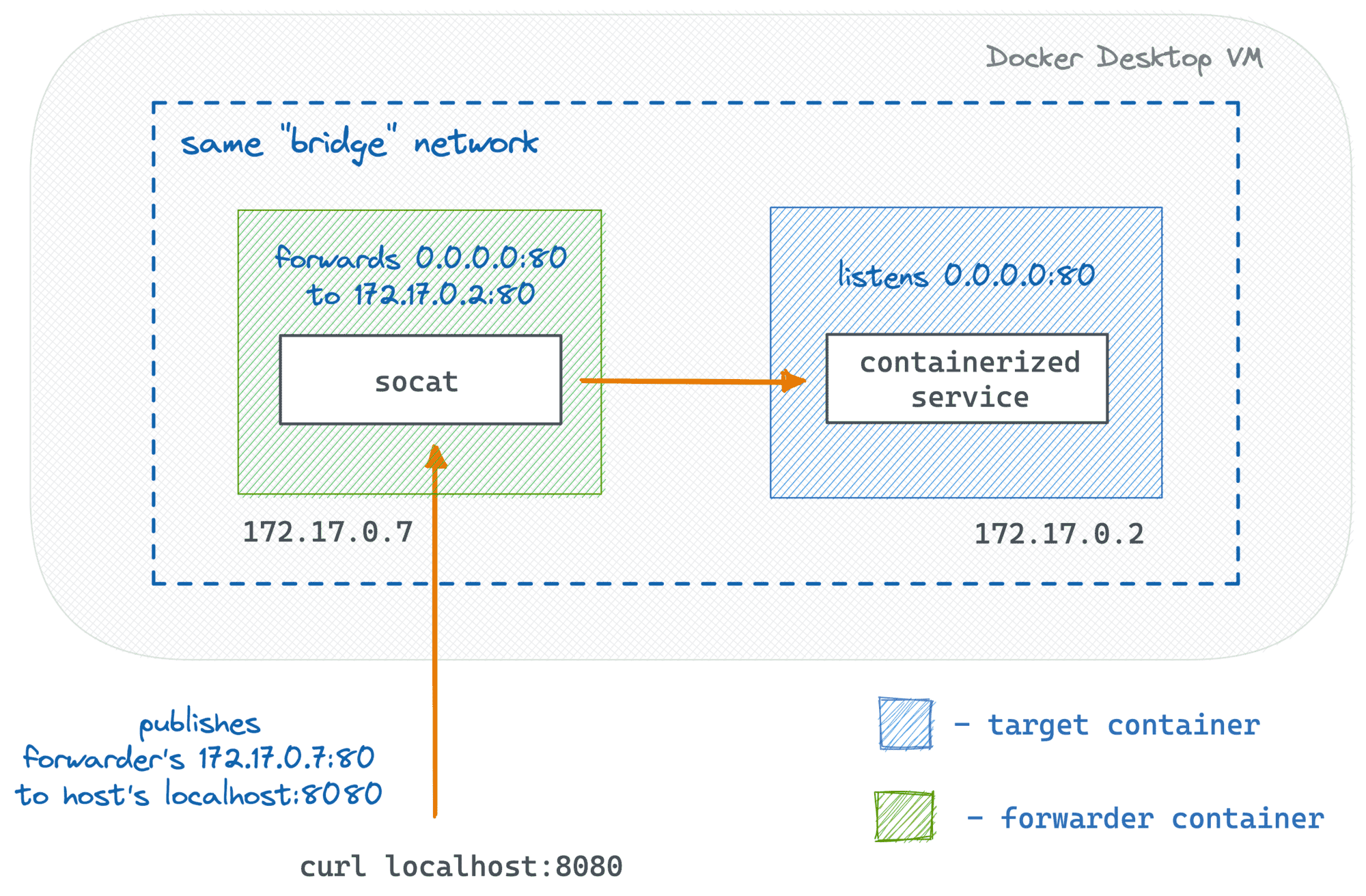

Last but not least, for that "proxy" solution to work, we should be able to start a new container that can talk to the target container (using its IP address). But this is generally not a problem when both containers reside in the same Docker network.

The solution

The trick is to start a new auxiliary container attached to the same network the target container resides in and ask Docker to publish one (or many) of its ports. Publishing the auxiliary container's port doesn't by itself establish connectivity with the target's port. However, we can create that connectivity by launching a tiny proxy process inside the new container that forwards not network packets but the actual data.

Let's try to reproduce this experiment. First, we'll need to start the target container. For simplicity, I'll use nginx (it listens on 0.0.0.0:80 inside of the container):

$ TARGET_PORT=80
$ HOST_PORT=8080
$ docker run -d --name target nginx

Now, we need to get the target's IP address and the network name:

$ TARGET_IP=$(
  docker inspect \
    -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' \
    target
)
$ NETWORK=$(
  docker container inspect \
    -f '{{range $net,$v := .NetworkSettings.Networks}}{{printf "%s" $net}}{{end}}' \
    target
)
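One caveat: both templates above loop over all networks of the container, so if the target is attached to more than one network, the values get glued together. A possible workaround, sketched below, is to print one "network IP" pair per line and take the first one. The first_network helper is a made-up name, and picking the first network is an assumption that may not match the network you actually want.

```shell
# Print "<network> <ip>" for the first network the container is attached to.
# Go templates iterate over maps in sorted key order, so "first" means
# first by network name.
# $1 = container name or ID
first_network() {
  docker container inspect \
    -f '{{range $net, $v := .NetworkSettings.Networks}}{{println $net $v.IPAddress}}{{end}}' \
    "$1" | head -n 1
}

# Usage (assumes a running container named "target"):
#   pair=$(first_network target)
#   NETWORK=${pair% *}      # everything before the space
#   TARGET_IP=${pair#* }    # everything after the space
```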

Finally, let's start the forwarder container with a tiny socat program inside:

$ docker run -d \
  --publish ${HOST_PORT}:${TARGET_PORT} \
  --network ${NETWORK} \
  --name forwarder nixery.dev/socat \
  socat TCP-LISTEN:${TARGET_PORT},fork TCP-CONNECT:${TARGET_IP}:${TARGET_PORT}

And it works!

$ curl localhost:${HOST_PORT}
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
...

Notice that the forwarder's port 80 is now published to the host's port 8080, so you can access it as usual, including opening localhost:8080 in your favorite browser. The socat program will take care of the rest. It listens on the forwarder's port 80 and transfers any incoming data to the (unexposed to the host) target's port 80 using its IP address. So, from the outside, it looks like the target's port is actually published to the host.

For me, this solution has two significant advantages:

- It doesn't require a restart of the target container, so it can be fast(er).

- It relies only on the standard means: the ability to publish a port of a (not started yet) container and the ability of containers residing in the same network to intercommunicate.

It means the solution will work in Docker Engine, Docker Desktop, and even with Docker Compose.

The Docker Compose compatibility is actually very handy. Often, in a multi-service setup, only the "public" services have their ports mapped. So, next time you need to access a helper service like a database from the host system, feel free to apply this trick and map those ports without restarting the whole Compose project.
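For a Compose project, the trick might look like the sketch below. All the values are hypothetical: a project called myapp (so its default network would be myapp_default), a service called db, and port 5432. On a Compose network, service names are resolvable through Docker's built-in DNS, so the sketch connects to the service by name and skips the IP lookup entirely.

```shell
# Hypothetical values: adjust them to your Compose project.
PROJECT=myapp     # Compose project name (by default, the directory name)
SERVICE=db        # service to reach from the host
PORT=5432         # port the service listens on inside its container

# Start a throwaway forwarder in the project's default network.
# --rm removes the forwarder container once it is stopped.
compose_forward() {
  docker run -d --rm \
    --publish "${PORT}:${PORT}" \
    --network "${PROJECT}_default" \
    --name "${SERVICE}-forwarder" nixery.dev/socat \
    socat "TCP-LISTEN:${PORT},fork" "TCP-CONNECT:${SERVICE}:${PORT}"
}

# Usage:
#   compose_forward                    # the service is now on localhost:5432
#   docker stop "${SERVICE}-forwarder" # tear the forwarder down when done
```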

Automating the solution

The downside of the solution is that it requires a few extra steps. You need to determine the target's IP and network name, come up with a simple but correct socat program, launch the forwarder container, etc.
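As a starting point, the manual steps could be folded into one small shell function. This is only a sketch under a few assumptions: the publish_port name and the forwarder naming scheme are made up, the first network reported for the target is the one to use, and the nixery.dev/socat image is reachable.

```shell
# publish_port <target> <host_port> <target_port>
# Starts a socat forwarder container next to an already running <target>.
# The body runs in a subshell, so its variables don't leak into your session.
publish_port() (
  target=$1 host_port=$2 target_port=$3

  # Take the first "<network> <ip>" pair reported for the target.
  pair=$(docker container inspect \
    -f '{{range $net, $v := .NetworkSettings.Networks}}{{println $net $v.IPAddress}}{{end}}' \
    "$target" | head -n 1)
  [ -n "$pair" ] || { echo "cannot inspect $target" >&2; exit 1; }
  network=${pair% *}
  ip=${pair#* }

  # Publish the forwarder's port and relay the payload to the target.
  docker run -d \
    --publish "${host_port}:${target_port}" \
    --network "$network" \
    --name "${target}-forwarder-${host_port}" nixery.dev/socat \
    socat "TCP-LISTEN:${target_port},fork" "TCP-CONNECT:${ip}:${target_port}"
)

# Usage:
#   publish_port target 8080 80
#   curl localhost:8080
```

Because the forwarder connects by IP address, it would need to be recreated if the target gets a new address, for example after a restart.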

At the same time, when automated, the solution can be really fast and handy. And it's generic enough to be used with other container runtimes too, including containerd and Kubernetes. So, I ended up writing a special port-forward command for my experimental container debugging tool:

cdebug port-forward <target> -L 8080:80
