- Not Every Container Has an Operating System Inside

- You Don't Need an Image To Run a Container

- You Need Containers To Build Images

- Containers Aren't Linux Processes

- From Docker Container to Bootable Linux Disk Image

Don't miss new posts in the series! Subscribe to the blog updates and get deep technical write-ups on Cloud Native topics direct into your inbox.

You need containers to build images. Yes, you heard that right. Not the other way around.

For people who found their way to containers through Docker (most of us, I believe), it may seem like images are somehow primary. We've been taught to start with a Dockerfile, build an image using that file, and only then run a container from that image. Alternatively, we could run a container by specifying an image from a registry, yet the main idea remains: an image comes first, and only then the container.

But what if I told you that the actual workflow is the reverse? Even when you are building your very first image using Docker, podman, or buildah, you are already, albeit implicitly, running containers under the hood!

How container images are created

⚠️ As Jerry Young rightfully pointed out in the comments, the experiment below can be reproduced only with the good old (pre-BuildKit) docker build command, e.g., by setting DOCKER_BUILDKIT=0. If the newer BuildKit engine is used, the temporary containers won't show up in the docker stats output. However, I'm pretty sure that even with BuildKit, containers are still needed to build images.

Let's not take this claim on faith and instead take a closer look at the image building procedure. The easiest way to spot this behavior is to build a simple image using the following Dockerfile:

```dockerfile
FROM debian:latest
RUN sleep 2 && apt-get update
RUN sleep 2 && apt-get install -y uwsgi
RUN sleep 2 && apt-get install -y python3
COPY some_file /
```

While building the image, try running docker stats -a in another terminal:

Huh, we haven't launched any containers ourselves, yet docker stats shows that there were 3 of them 🙈 How come?

Simplifying a bit, images can be seen as archives with a filesystem inside. Additionally, they may contain some configuration data, like a default command to be executed when a container starts, exposed ports, etc., but we will mostly focus on the filesystem part. Luckily, we already know that, technically, images aren't required to run containers. Unlike virtual machines, containers are just isolated and restricted processes on your Linux host. They do form an isolated execution environment, including a personalized root filesystem, but the bare minimum to start a container is just a folder with a single executable file inside.

So, when we start a container from an image, the image gets unpacked and its content is provided to the container runtime in the form of a filesystem bundle, i.e., a regular directory containing the future root filesystem files and some configs (all those layers you may have started thinking about are abstracted away by a union mount driver like OverlayFS). Thus, if you don't have an image but you do need the Alpine Linux distro as the execution environment, you can always grab Alpine's rootfs (2.6 MB), put it in a regular folder on your disk, mix in your application files, feed it to the container runtime, and call it a day.
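To make the bundle idea a bit more tangible, here is a minimal Python sketch (standard library only) that unpacks a rootfs tarball into bundle/rootfs and writes a skeleton config.json next to it, loosely following the bundle layout of the OCI runtime specification. The tiny in-memory tarball and its bin/hello script are hypothetical stand-ins for a real rootfs such as Alpine's, and the generated config is deliberately incomplete: a real one (e.g., produced by runc spec) also describes namespaces, mounts, capabilities, and more. The sketch only prepares the directory; it does not start anything.

```python
import io
import json
import tarfile
import tempfile
from pathlib import Path
from typing import List


def make_fake_rootfs_tarball() -> bytes:
    """Return a gzipped tarball with one file, a hypothetical stand-in for a real rootfs."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w:gz") as tar:
        data = b"#!/bin/sh\necho hello from the container\n"
        info = tarfile.TarInfo(name="bin/hello")
        info.size = len(data)
        info.mode = 0o755  # executable, like a real entrypoint would be
        tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()


def make_bundle(bundle_dir: Path, rootfs_tarball: bytes, args: List[str]) -> Path:
    """Unpack the tarball into <bundle_dir>/rootfs and write a skeleton config.json."""
    rootfs = bundle_dir / "rootfs"
    rootfs.mkdir(parents=True, exist_ok=True)
    with tarfile.open(fileobj=io.BytesIO(rootfs_tarball), mode="r:gz") as tar:
        if hasattr(tarfile, "data_filter"):
            tar.extractall(rootfs, filter="data")  # safer extraction where supported
        else:
            tar.extractall(rootfs)
    # Deliberately incomplete runtime config: no namespaces, mounts, capabilities, etc.
    config = {
        "ociVersion": "1.0.2",
        "root": {"path": "rootfs", "readonly": False},
        "process": {"args": args, "cwd": "/", "user": {"uid": 0, "gid": 0}},
    }
    (bundle_dir / "config.json").write_text(json.dumps(config, indent=2))
    return bundle_dir


# Usage: build a bundle in a throwaway directory and inspect it.
with tempfile.TemporaryDirectory() as tmp:
    bundle = make_bundle(Path(tmp) / "bundle", make_fake_rootfs_tarball(), ["/bin/hello"])
    entries = sorted(p.name for p in bundle.iterdir())
    cfg = json.loads((bundle / "config.json").read_text())
    hello_is_file = (bundle / "rootfs" / "bin" / "hello").is_file()

print(entries)  # ['config.json', 'rootfs']
```

Handing a properly configured directory like this to a runtime such as runc is what actually starts a container; no image is involved at that point.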

However, to unleash the full power of containers, we need handy image building facilities. Historically, Dockerfiles have served this purpose. A Dockerfile must begin with a FROM instruction (only parser directives, comments, and global ARG instructions may precede it). This instruction specifies the base image, while the rest of the Dockerfile describes the difference between the base and the derived (i.e., current) images.

The most basic container image is the so-called scratch image. It corresponds to an empty folder, and the FROM scratch instruction in a Dockerfile is a no-op.
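The "difference" between images can be thought of as a stack of layers applied on top of an empty filesystem (scratch). The following Python sketch is a simplified, in-memory model, not how OverlayFS works internally: a filesystem is a dict mapping relative paths to file contents, upper layers override lower ones, and a deletion is expressed with a whiteout entry whose name carries the .wh. prefix, the convention used by the OCI image layer format. Opaque whiteouts, directory entries, permissions, and ownership are ignored, and the file names in the usage part are hypothetical.

```python
from typing import Dict, List

FS = Dict[str, bytes]  # relative path -> file content (directories are implicit)

WHITEOUT_PREFIX = ".wh."  # OCI layer convention: ".wh.<name>" deletes <name> from lower layers


def apply_layer(lower: FS, layer: FS) -> FS:
    """Return the filesystem seen when `layer` is stacked on top of `lower`."""
    merged = dict(lower)
    for path, content in layer.items():
        parent, _, name = path.rpartition("/")
        if name.startswith(WHITEOUT_PREFIX):
            target = name[len(WHITEOUT_PREFIX):]
            victim = f"{parent}/{target}" if parent else target
            # Hide the file itself and, if it was a directory, everything below it.
            for existing in list(merged):
                if existing == victim or existing.startswith(victim + "/"):
                    del merged[existing]
        else:
            merged[path] = content  # upper layers win over lower ones
    return merged


def flatten(layers: List[FS]) -> FS:
    fs: FS = {}  # FROM scratch: start from an empty filesystem
    for layer in layers:
        fs = apply_layer(fs, layer)
    return fs


base = {"etc/os-release": b"ID=debian\n", "tmp/cache": b"junk"}
app = {"usr/bin/app": b"\x7fELF..."}
cleanup = {"tmp/.wh.cache": b""}  # whiteout hiding tmp/cache
merged = flatten([base, app, cleanup])
print(sorted(merged))  # ['etc/os-release', 'usr/bin/app']
```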

Now, let's take a look at the beloved alpine image:

```dockerfile
# https://github.com/alpinelinux/docker-alpine/blob/v3.11/x86_64/Dockerfile
FROM scratch
ADD alpine-minirootfs-3.11.6-x86_64.tar.gz /
CMD ["/bin/sh"]
```

I.e., to make the Alpine Linux distro image, we just need to copy its root filesystem into an empty folder (the scratch image), and that's it! (When given a local tar archive, ADD automatically extracts it into the destination.) Well, I bet the Dockerfiles you've seen so far are rarely that trivial. More often than not, we need to use the distro's facilities to prepare the filesystem of the future container, and one of the most common examples is probably pre-installing some external packages using yum, apt, or apk:

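```dockerfile
FROM debian:latest

# The debian image ships without apt package lists, so refresh them first
RUN apt-get update && apt-get install -y ca-certificates
```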

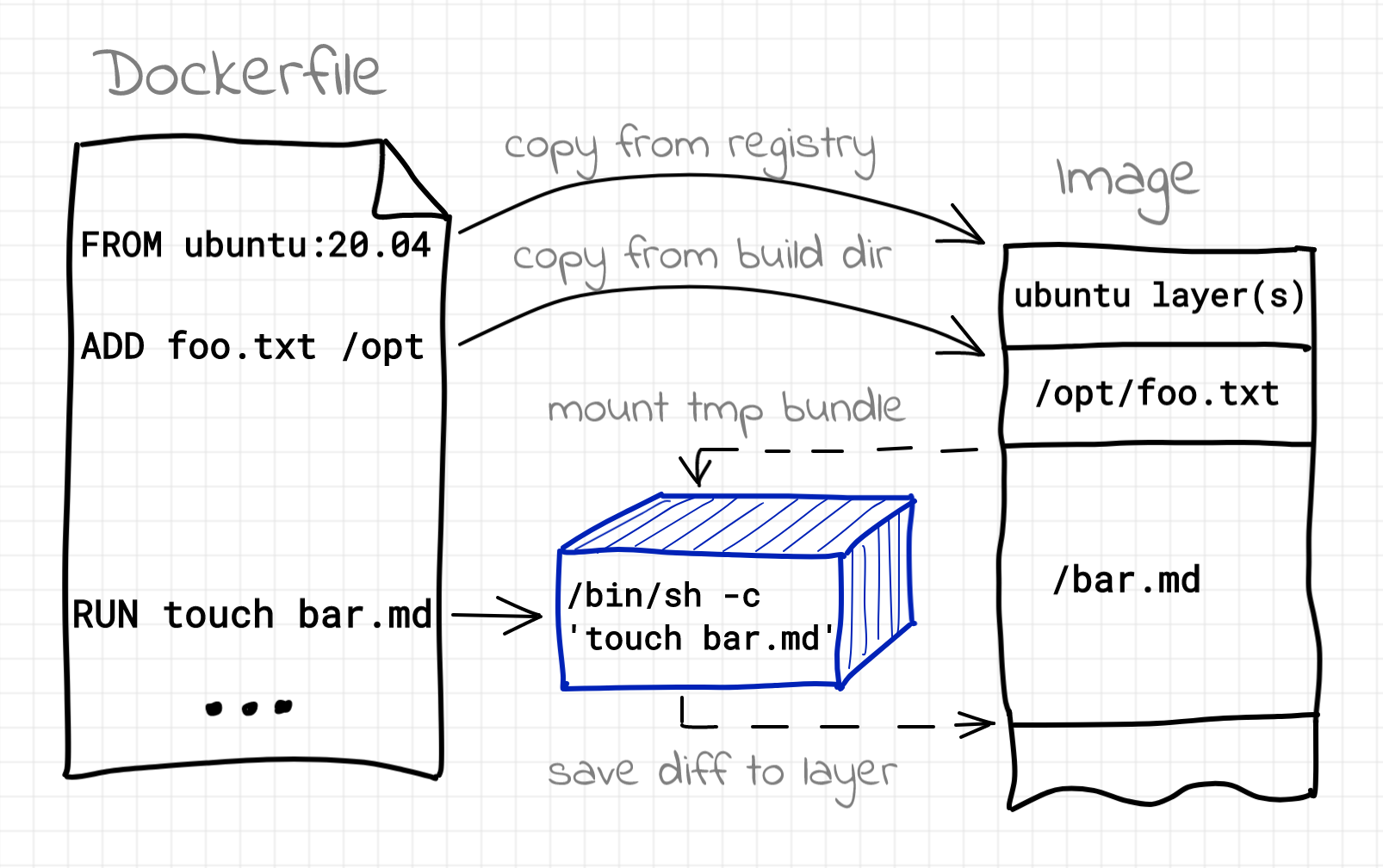

But how can we have apt running if we are building this image, say, on a Fedora host? Containers to the rescue! Every time Docker (or buildah, podman, etc.) encounters a RUN instruction in the Dockerfile, it actually fires up a new container! The bundle for this container is formed by the base image plus all the changes made by the preceding instructions in the Dockerfile (if any). When the RUN step completes, all the changes made to the container's filesystem (the so-called diff) become a new layer of the image being built, and the process repeats for the next Dockerfile instruction.
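The RUN loop just described can be sketched in a few lines of Python. This is a toy model under obvious assumptions: filesystems are dicts of path to content, a "container" is just a copy of the current rootfs, and each RUN command is a hypothetical Python function mutating that copy. What it does show is the mechanics: every step starts from the result of the previous one, and only the diff, with deletions recorded as .wh. whiteout entries, becomes a new layer.

```python
from typing import Callable, Dict, List, Tuple

FS = Dict[str, bytes]  # relative path -> file content


def diff(before: FS, after: FS) -> FS:
    """Compute the layer that turns `before` into `after`."""
    layer: FS = {}
    for path, content in after.items():
        if before.get(path) != content:
            layer[path] = content  # added or modified file
    for path in before:
        if path not in after:
            parent, _, name = path.rpartition("/")
            whiteout = f"{parent}/.wh.{name}" if parent else f".wh.{name}"
            layer[whiteout] = b""  # deletion recorded as a whiteout entry
    return layer


def build(base: FS, run_steps: List[Callable[[FS], None]]) -> Tuple[FS, List[FS]]:
    """Toy builder: one throwaway 'container' per RUN step, one layer per diff."""
    rootfs = dict(base)
    layers: List[FS] = []
    for run in run_steps:
        container_fs = dict(rootfs)  # the bundle: base plus all previous changes
        run(container_fs)  # the RUN command mutates the container's filesystem
        layers.append(diff(rootfs, container_fs))
        rootfs = container_fs  # the next step starts from this result
    return rootfs, layers


# Hypothetical RUN steps standing in for real commands.
def apt_update(fs: FS) -> None:
    fs["var/lib/apt/lists/index"] = b"package index"


def install_uwsgi(fs: FS) -> None:
    fs["usr/bin/uwsgi"] = b"uwsgi binary"


def clean_lists(fs: FS) -> None:
    del fs["var/lib/apt/lists/index"]


final_fs, layers = build({"etc/os-release": b"ID=debian\n"}, [apt_update, install_uwsgi, clean_lists])
print(len(layers))  # 3
print(layers[2])  # {'var/lib/apt/lists/.wh.index': b''}
```

One practical consequence shows up in the last step: deleting a file in a later RUN only adds a whiteout, while the bytes stay in the earlier layer. That's why cleanup usually has to happen in the same RUN instruction that created the files.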

Building image using Dockerfile

Getting back to the original example from the beginning of this article, an attentive reader could have noticed that the number of containers we saw in the second terminal corresponded exactly to the number of RUN instructions in the Dockerfile. For people with a solid understanding of container internals, this may sound obvious. However, for the rest of us, whose container experience is mostly hands-on, the Dockerfile-based (and immensely popular) workflow may instead obscure what's really going on.

Luckily, even though Dockerfiles are the de facto standard for describing images, they are not the only way. For instance, with Docker we can commit any container (docker commit) to produce a new image. All the changes made to the container's filesystem by any command run inside it since its start will form the topmost layer of the image created by the commit, while the base will be taken from the image used to create that container. However, if we decide to use this method, we may face some reproducibility problems.

How to build an image without a Dockerfile

Interestingly enough, some of the newer image building tools consider the Dockerfile not an advantage but a limitation. For instance, buildah promotes an alternative, command-line-based image building procedure:

```shell
# Start building an image FROM fedora
$ buildah from fedora
Getting image source signatures
Copying blob 4c69497db035 done
Copying config adfbfa4a11 done
Writing manifest to image destination
Storing signatures
fedora-working-container # <-- name of the newly started container!

# Examine running containers
$ buildah ps
CONTAINER ID  BUILDER  IMAGE ID      IMAGE NAME                       CONTAINER NAME
2aa8fb539d69     *     adfbfa4a115a  docker.io/library/fedora:latest  fedora-working-container

# Same as using ENV instruction in Dockerfile
$ buildah config --env MY_VAR="foobar" fedora-working-container

# Same as RUN in Dockerfile (-y answers yum's confirmation prompt non-interactively)
$ buildah run fedora-working-container -- yum install -y python3
... installing packages

# Finally, make a layer (or an image)
$ buildah commit fedora-working-container
```

We can choose between building an image interactively on the command line or putting all these instructions into a shell script, but regardless of the choice, buildah's approach makes the need for running builder containers obvious. Skipping the rant about the pros and cons of this technique, I just want to note that the nature of image building might have been much more apparent had buildah's approach predated Dockerfiles.

Finally, let's take a brief look at two other prominent tools: Google's kaniko and Uber's makisu. They tackle the image building problem from a slightly different angle. These tools don't really run containers while building images. Instead, they directly modify the local filesystem while following the image building instructions 🤯 I.e., if you accidentally start such a tool on your laptop, it's highly likely that your host OS will be wiped and replaced with the rootfs of the image. So, beware. Evidently, these tools are meant to be executed entirely inside an already existing container. This solves some security problems by removing the need to elevate the builder process's privileges. Nevertheless, while the technique itself is very different from the traditional Docker or buildah approaches, the containers are still there. The main difference is that they have been moved out of the build tool's scope.
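To get a feel for what "directly modifying the local filesystem" implies, here is a rough Python sketch of a snapshot-and-compare approach that an in-container builder could use to turn in-place changes into a layer. It is similar in spirit to how such tools snapshot the filesystem between steps, but it is not kaniko's or makisu's actual code: a temporary directory plays the role of the container's root, a hypothetical build step edits it in place, and changes are detected by hashing every regular file before and after. Symlinks, permissions, and ownership are ignored.

```python
import hashlib
import os
import tempfile
from pathlib import Path
from typing import Dict, List, Tuple


def snapshot(root: Path) -> Dict[str, str]:
    """Map every regular file under root (as a relative POSIX path) to its SHA-256 digest."""
    digests: Dict[str, str] = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = Path(dirpath) / name
            if full.is_symlink() or not full.is_file():
                continue  # symlinks, sockets, devices, etc. are out of scope here
            rel = full.relative_to(root).as_posix()
            digests[rel] = hashlib.sha256(full.read_bytes()).hexdigest()
    return digests


def changed_paths(before: Dict[str, str], after: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Return (added or modified paths, deleted paths) between two snapshots."""
    added_or_modified = sorted(p for p, h in after.items() if before.get(p) != h)
    deleted = sorted(p for p in before if p not in after)
    return added_or_modified, deleted


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)  # stands in for the container's "/" that the builder modifies
    (root / "etc").mkdir()
    (root / "etc" / "motd").write_text("hello\n")
    (root / "old.txt").write_text("bye\n")
    before = snapshot(root)

    # Pretend a build instruction just ran directly in this filesystem.
    (root / "etc" / "motd").write_text("hello, world\n")
    (root / "usr").mkdir()
    (root / "usr" / "app").write_text("binary\n")
    (root / "old.txt").unlink()

    changes = changed_paths(before, snapshot(root))

print(changes)  # (['etc/motd', 'usr/app'], ['old.txt'])
```

Run against the real "/" of a laptop, the "pretend" step above would be editing the host itself, which is exactly why such tools belong inside a container.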

Instead of a conclusion

The concept of container images turned out to be very handy. The layered image structure, in conjunction with union mounts like OverlayFS, made the storage and usage of images immensely efficient. The declarative Dockerfile-based approach enabled reproducible and cacheable builds of artifacts. This allowed the idea of container images to become so widespread that sometimes it may seem like an inalienable and archetypal part of the containerverse. However, as we saw in this article, from the implementation standpoint, containers are independent of images. Instead, most of the time we need containers to build images, not vice versa.

Appendix: Image Building Tools
