- Docker: How To Debug Distroless And Slim Containers

- Kubernetes Ephemeral Containers and kubectl debug Command

- Containers 101: attach vs. exec - what's the difference?

- Why and How to Use containerd From Command Line

- Docker: How To Extract Image Filesystem Without Running Any Containers

- KiND - How I Wasted a Day Loading Local Docker Images


You can find a Russian translation of this article here.

Last week at KubeCon, there was a talk about Kubernetes ephemeral containers. The room was super full - some people were even standing by the doors trying to sneak in. "This must be something really great!" - thought I and decided to finally give Kubernetes ephemeral containers a try.

So, below are my findings - traditionally sprinkled with a bit of containerization theory and practice 🤓

TL;DR: Ephemeral containers are indeed great and much needed. The fastest way to get started is the kubectl debug command. However, this command might be tricky to use if you're not container-savvy.

The Need For Ephemeral Containers

How to debug Kubernetes workloads?

Baking a full-blown Linux userland and debugging tools into production container images makes them inefficient and increases the attack surface. Copying debugging tools into running containers on-demand with kubectl cp is cumbersome and not always possible (it requires a tar executable in the target container). But even when the debugging tools are available in the container, kubectl exec can be of little help if this container is in a crash loop.

So, what other debugging options do we have given the immutability of the Pod's spec?

There is, of course, debugging right from a cluster node, but SSH access to the cluster might be off-limits for many of us. Well, there are probably not many options left...

Unless we can relax the Pod immutability requirement a bit!

What if new (somewhat limited) containers could be added to an already running Pod without restarting it? Since Pods are just groups of semi-fused containers and the isolation between containers in a Pod is weakened, such a new container could be used to inspect the other containers in the (acting up) Pod regardless of their state and content.

And that's how we get to the idea of an Ephemeral Container - "a special type of container that runs temporarily in an existing Pod to accomplish user-initiated actions such as troubleshooting."

Isn't making Pods mutable against the Kubernetes declarative nature? 🤔

What prevents you from starting to (ab)use ephemeral containers for running production workloads? Besides common sense, the following limitations:

- They lack guarantees for resources or execution.

- They can use only resources already allocated to the Pod.

- They cannot be restarted, and no ports can be exposed.

- No liveness/readiness/etc. probes can be configured.

So, nothing technically prevents you from, say, performing one-off tasks on behalf of a running Pod by means of ephemeral containers (please don't quote me on that if you decide to try it in prod), but they are unlikely to be usable for anything more durable.

Now that the theory is clear, it's time for a little practice!
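The limitations above correspond to fields that a regular container accepts but an ephemeral container does not. Here is a minimal Python sketch of such a check, assuming the container spec is a plain dict. The helper name `disallowed_fields` is hypothetical, and the field list reflects my reading of the EphemeralContainer API reference rather than the API server's actual validation code:

```python
# Fields regular containers accept but ephemeral containers reject
# (assumption: taken from the EphemeralContainer API reference, not exhaustive).
DISALLOWED = ("ports", "livenessProbe", "readinessProbe", "startupProbe",
              "lifecycle", "resources")


def disallowed_fields(container: dict) -> list[str]:
    """Return the non-empty fields that would be rejected for an ephemeral container."""
    return [name for name in DISALLOWED if container.get(name)]


# Hypothetical specs used only for illustration.
regular = {
    "name": "app",
    "image": "busybox",
    "ports": [{"containerPort": 8080}],
    "livenessProbe": {"httpGet": {"path": "/", "port": 8080}},
}
debugger = {"name": "debugger", "image": "busybox", "stdin": True, "tty": True}

print(disallowed_fields(regular))   # ['ports', 'livenessProbe']
print(disallowed_fields(debugger))  # []
```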

The Mighty kubectl debug Command

Kubernetes adds support for ephemeral containers by extending the Pod spec with the new attribute called (surprise, surprise!) ephemeralContainers. This attribute holds a list of Container-like objects, and it's one of the few Pod spec attributes that can be modified for an already created Pod instance.

More details on the new Ephemeral Containers API 💡

The ephemeralContainers list can be appended to by PATCH-ing a new dedicated subresource /pods/NAME/ephemeralcontainers. This can be done only once the Pod has been created.

Trying to create a Pod with a non-empty ephemeral containers list will fail with the following error:

The Pod is invalid: spec.ephemeralContainers: Forbidden: cannot be set on create.

Interestingly, kubectl edit cannot be used to add ephemeral containers either, even on the fly:

Pod is invalid: spec: Forbidden: pod updates may not change fields other than `spec.containers[*].image`, `spec.initContainers[*].image`, `spec.activeDeadlineSeconds`, `spec.tolerations` (only additions to existing tolerations) or `spec.terminationGracePeriodSeconds` (allow it to be set to 1 if it was previously negative)

A container spec added to the ephemeralContainers list cannot be modified and remains in the list even after the corresponding container terminates.

Adding elements to the ephemeralContainers list makes new containers (try to) start in the existing Pod. The EphemeralContainer spec has a substantial number of properties to tweak. In particular, much like regular containers, ephemeral containers can be interactive and PTY-controlled, so that the subsequent kubectl attach -it execution would provide you with a familiar shell-like experience.
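To make the append-only behavior of the list more tangible, here is a toy in-memory model in Python. It is a sketch of the rules described above, not the API server's logic: both function names are hypothetical, and rejecting a duplicate name is my simplification of "existing entries cannot be modified".

```python
import copy


def ephemeral_containers_path(namespace: str, pod: str) -> str:
    """Path of the dedicated subresource that accepts the PATCH."""
    return f"/api/v1/namespaces/{namespace}/pods/{pod}/ephemeralcontainers"


def add_ephemeral_container(pod: dict, container: dict) -> dict:
    """Return a copy of the Pod with the container appended to spec.ephemeralContainers."""
    existing = pod["spec"].get("ephemeralContainers", [])
    if any(c["name"] == container["name"] for c in existing):
        # Entries already in the list cannot be changed or removed; only new ones can be added.
        raise ValueError(f"ephemeral container {container['name']!r} already exists")
    updated = copy.deepcopy(pod)
    updated["spec"].setdefault("ephemeralContainers", []).append(container)
    return updated


# Hypothetical Pod and container used only for illustration.
pod = {"metadata": {"name": "slim-abc"}, "spec": {"containers": [{"name": "app"}]}}
debugger = {"name": "debugger", "image": "busybox", "stdin": True, "tty": True}

patched = add_ephemeral_container(pod, debugger)
print(ephemeral_containers_path("default", "slim-abc"))
print([c["name"] for c in patched["spec"]["ephemeralContainers"]])  # ['debugger']
```

Calling `add_ephemeral_container(patched, debugger)` a second time raises `ValueError` in this model, mirroring the fact that an existing entry stays in the list as it was added.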

However, the majority of Kubernetes users won't need to deal with the ephemeral containers API directly, thanks to the powerful kubectl debug command that tries to abstract ephemeral containers behind a higher-level debugging workflow. Let's see how kubectl debug can be used!

Kubernetes 1.23+ playground cluster is required 🛠️

For my playgrounds, I prefer disposable virtual machines. Here is a Vagrantfile to quickly spin up a Debian box with Docker on it. Drop it into a new folder, and then run vagrant up; vagrant ssh from there.

Vagrant.configure("2") do |config|

config.vm.box = "debian/bullseye64"

config.vm.provider "virtualbox" do |vb|

vb.cpus = 4

vb.memory = "4096"

end

config.vm.provision "shell", inline: <<-SHELL

apt-get update

apt-get install -y curl git cmake vim

SHELL

config.vm.provision "docker"

end

Once the machine is up, you can use arkade to finish the setup in no time:

$ curl -sLS https://get.arkade.dev | sudo sh

$ arkade get kind kubectl

# Make sure it's Kubernetes 1.23+

$ kind create cluster

Using kubectl debug - first (and unfortunate) try

For a guinea-pig workload I'll use a basic Deployment with two distroless containers (Python for the main app and Node.js for a sidecar):

$ kubectl apply -f - <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: slim
spec:
  selector:
    matchLabels:
      app: slim
  template:
    metadata:
      labels:
        app: slim
    spec:
      containers:
      - name: app
        image: gcr.io/distroless/python3-debian11
        command:
        - python
        - -m
        - http.server
        - '8080'
      - name: sidecar
        image: gcr.io/distroless/nodejs-debian11
        command:
        - /nodejs/bin/node
        - -e
        - 'setTimeout(() => console.log("done"), 999999)'
EOF

# Save the Pod name for future reference:

$ POD_NAME=$(kubectl get pods -l app=slim -o jsonpath='{.items[0].metadata.name}')

If, or rather when, such a Deployment starts misbehaving, there will be very little help from the kubectl exec command since distroless images usually lack even the basic exploration tools.

Proof for the above Deployment 🤓

# app container - no `bash`

$ kubectl exec -it -c app ${POD_NAME} -- bash

error: exec: "bash": executable file not found in $PATH: unknown

# ...and very limited `sh` (actually, it's aliased `dash`)

$ kubectl exec -it -c app ${POD_NAME} -- sh

$# ls

sh: 1: ls: not found

# sidecar container - no shell at all

$ kubectl exec -it -c sidecar ${POD_NAME} -- bash

error: exec: "bash": executable file not found in $PATH: unknown

$ kubectl exec -it -c sidecar ${POD_NAME} -- sh

error: exec: "sh": executable file not found in $PATH: unknown

So, let's try inspecting Pods using an ephemeral container:

$ kubectl debug -it --attach=false -c debugger --image=busybox ${POD_NAME}

The above command adds to the target Pod a new ephemeral container called debugger that uses the busybox:latest image. I intentionally created it interactive (-i) and PTY-controlled (-t) so that attaching to it later would provide a typical interactive shell experience.

Here is the impact of the above command on the Pod spec:

$ kubectl get pod ${POD_NAME} \

-o jsonpath='{.spec.ephemeralContainers}' \

| python3 -m json.tool

[

{

"image": "busybox",

"imagePullPolicy": "Always",

"name": "debugger",

"resources": {},

"stdin": true,

"terminationMessagePath": "/dev/termination-log",

"terminationMessagePolicy": "File",

"tty": true

}

]

...and on its status:

$ kubectl get pod ${POD_NAME} \

-o jsonpath='{.status.ephemeralContainerStatuses}' \

| python3 -m json.tool

[

{

"containerID": "containerd://049d76...",

"image": "docker.io/library/busybox:latest",

"imageID": "docker.io/library/busybox@sha256:ebadf8...",

"lastState": {},

"name": "debugger",

"ready": false,

"restartCount": 0,

"state": {

👉 "running": {

"startedAt": "2022-05-29T13:41:04Z"

}

}

}

]
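When scripting this check, the same status field can be read programmatically. Below is a small Python sketch, assuming the Pod object has been obtained as JSON (for example from kubectl get pod with -o json). The function name is hypothetical, and the second entry in the sample data is made up to show a non-running state:

```python
import json


def running_ephemeral_containers(pod: dict) -> list[str]:
    """Names of ephemeral containers whose status reports a `running` state."""
    statuses = pod.get("status", {}).get("ephemeralContainerStatuses") or []
    return [s["name"] for s in statuses if "running" in s.get("state", {})]


# Sample trimmed from the status output above; "old-debugger" is a made-up entry.
sample = json.loads("""
{
  "status": {
    "ephemeralContainerStatuses": [
      {"name": "debugger", "state": {"running": {"startedAt": "2022-05-29T13:41:04Z"}}},
      {"name": "old-debugger", "state": {"terminated": {"exitCode": 0}}}
    ]
  }
}
""")

print(running_ephemeral_containers(sample))  # ['debugger']
```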

If the ephemeral container is running, we can try attaching to it:

$ kubectl attach -it -c debugger ${POD_NAME}

# Trying to access the `app` container (a python webserver).

$# wget -O - localhost:8080

Connecting to localhost:8080 (127.0.0.1:8080)

writing to stdout

<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01//EN" "http://www.w3.org/TR/html4/strict.dtd">

<html>

...

</html>

At first sight, it worked like a charm!

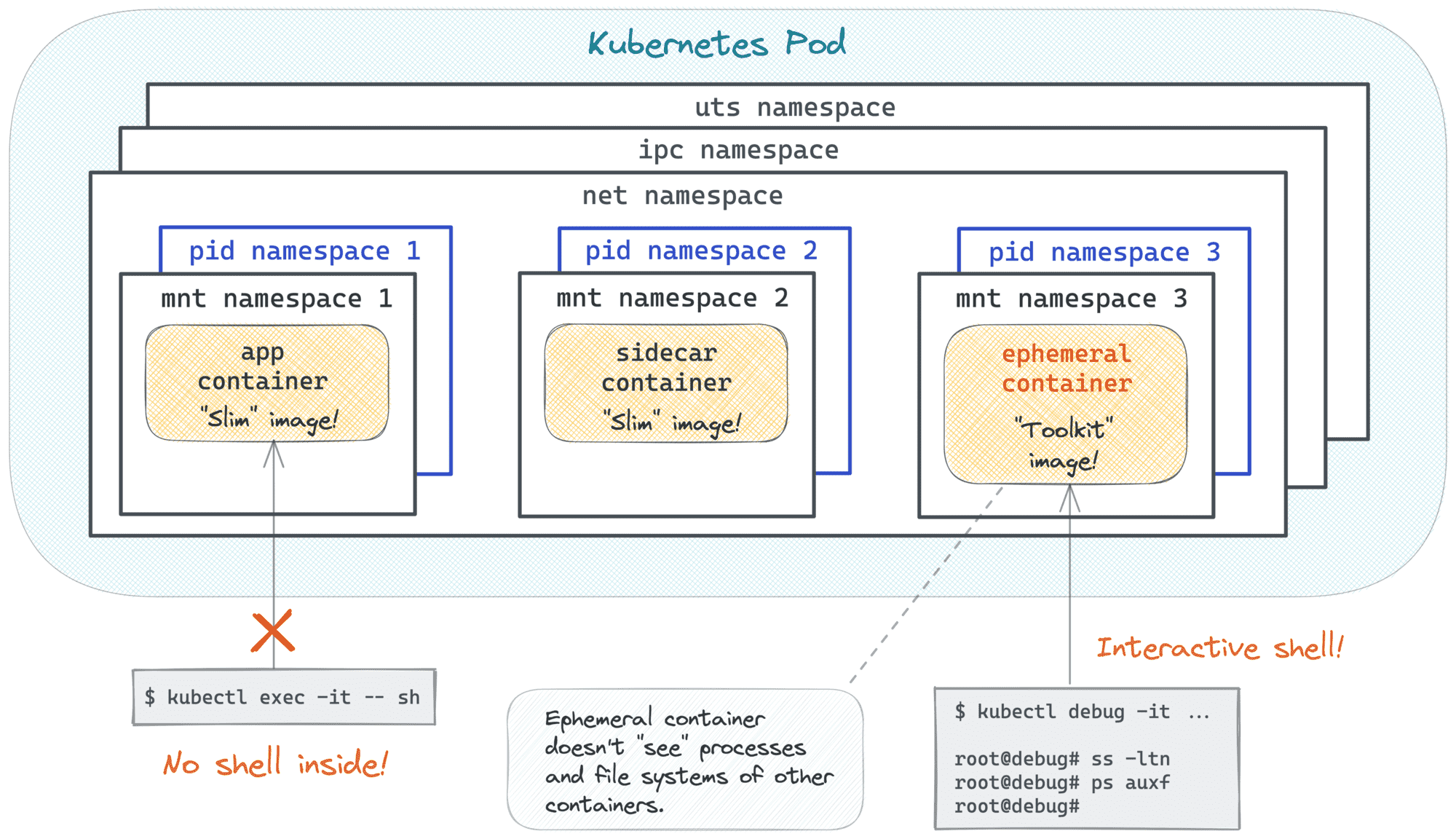

But there is actually a problem:

$# ps auxf

PID USER TIME COMMAND

1 root 0:00 sh

14 root 0:00 ps auxf

The ps output from inside of the debugger container shows only the processes of that container... So, kubectl debug just gave me a shared net (and probably ipc) namespace, likely the same parent cgroup as for the other Pod's containers, and that's pretty much it! Sounds way too limited for a seamless debugging experience 🤔
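One way to verify which namespaces two processes actually share is to compare the symlinks under /proc/<PID>/ns, for instance from a cluster node where both processes are visible. Here is a minimal Python sketch of that comparison. The function name and the `proc_root` parameter are hypothetical, and the demo runs against a fake /proc tree with made-up inode numbers so that it works without a cluster:

```python
import os
import tempfile


def shared_namespaces(pid_a: int, pid_b: int, proc_root: str = "/proc") -> set[str]:
    """Namespace types whose /proc/<pid>/ns/<type> links point to the same namespace."""
    def links(pid: int) -> dict[str, str]:
        ns_dir = os.path.join(proc_root, str(pid), "ns")
        return {name: os.readlink(os.path.join(ns_dir, name)) for name in os.listdir(ns_dir)}

    a, b = links(pid_a), links(pid_b)
    return {name for name in a.keys() & b.keys() if a[name] == b[name]}


# Fake /proc tree: PID 1 and PID 2 share net and ipc, but not pid and mnt.
with tempfile.TemporaryDirectory() as fake_proc:
    layout = {
        1: {"net": "net:[4026532001]", "ipc": "ipc:[4026532002]",
            "pid": "pid:[4026532003]", "mnt": "mnt:[4026532004]"},
        2: {"net": "net:[4026532001]", "ipc": "ipc:[4026532002]",
            "pid": "pid:[4026532099]", "mnt": "mnt:[4026532098]"},
    }
    for pid, namespaces in layout.items():
        ns_dir = os.path.join(fake_proc, str(pid), "ns")
        os.makedirs(ns_dir)
        for name, target in namespaces.items():
            os.symlink(target, os.path.join(ns_dir, name))
    demo = shared_namespaces(1, 2, proc_root=fake_proc)
    print(sorted(demo))  # ['ipc', 'net']
```

On a real system, reading another process's ns links usually requires sufficient privileges, so treat this as a node-level diagnostic rather than something to run inside the debugger container.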

While troubleshooting a Pod, I'd typically want to see the processes of all its containers and explore their filesystems. Can an ephemeral container be a little more revealing?

Using kubectl debug with a shared pid namespace

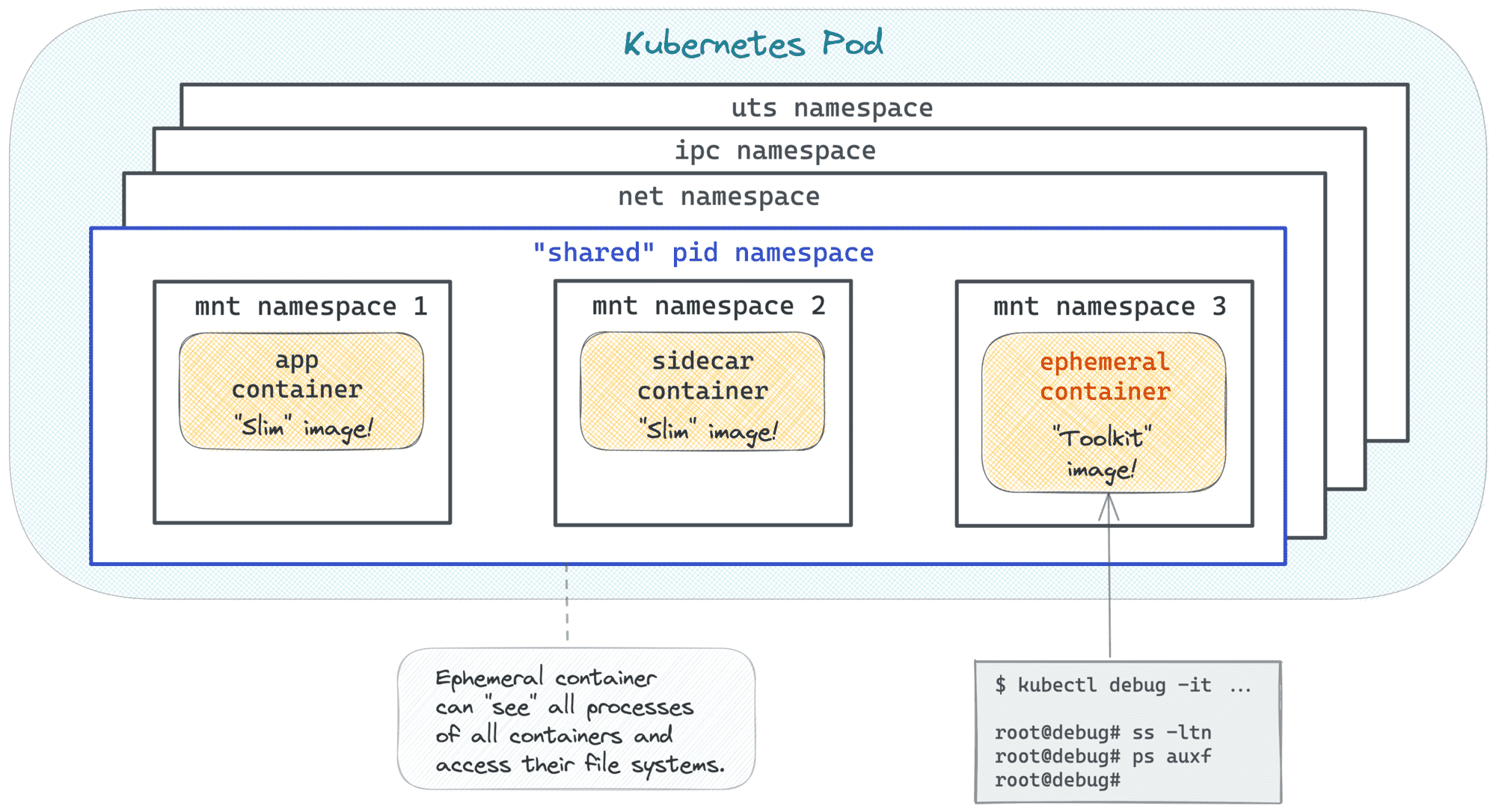

Interestingly, the official documentation page specifically mentions this problem! It also offers a workaround - enabling a shared pid namespace for all the containers in a Pod.

It can be done by setting the shareProcessNamespace attribute in the Pod template spec to true:

$ kubectl patch deployment slim --patch '
spec:
  template:
    spec:
      shareProcessNamespace: true'

Ok, let's try inspecting the Pod one more time:

$ kubectl debug -it -c debugger --image=busybox \

$(kubectl get pods -l app=slim -o jsonpath='{.items[0].metadata.name}')

$# ps auxf

PID USER TIME COMMAND

1 65535 0:00 /pause

7 root 0:00 python -m http.server 8080

19 root 0:00 /nodejs/bin/node -e setTimeout(() => console.log("done"), 999999)

37 root 0:00 sh

49 root 0:00 ps auxf

Looks better! It feels much closer to the debugging experience on a regular server where you'd see all the processes running in one place.

However, if you explore the filesystem with ls, you'll notice that it's a filesystem of the ephemeral container itself (busybox in our case), and not of any other container in the Pod. Well, it makes sense - containers in a Pod (typically) share net, ipc, and uts namespaces, they can share the pid namespace, but they never share the mnt namespace. Also, filesystems of different containers always stay independent. Otherwise, all sorts of collisions would start happening.

Nevertheless, while debugging, I want to have CRUD-like access to the misbehaving container filesystem! Luckily, there is a trick:

# From inside the ephemeral container:

$# ls /proc/$(pgrep python)/root/usr/bin

c_rehash getconf iconv locale openssl python python3.9 zdump

catchsegv getent ldd localedef pldd python3 tzselect

$# ls /proc/$(pgrep node)/root/nodejs

CHANGELOG.md LICENSE README.md bin include share

Thus, if you know the PID of a process from the container you want to explore, you can find its filesystem at /proc/<PID>/root from inside the debugging container.
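The pgrep-plus-path trick can be sketched in Python as well, which may be handy when the debugging image ships an interpreter but no pgrep. This is an illustration under assumptions: the helper name `container_roots` is hypothetical, it matches on a substring of /proc/<PID>/comm (roughly what pgrep does), and the demo uses a fake /proc tree mirroring the ps output above:

```python
import os
import tempfile


def container_roots(command: str, proc_root: str = "/proc") -> list[str]:
    """Return /proc/<PID>/root for every visible process whose name contains `command`."""
    pids = sorted((e for e in os.listdir(proc_root) if e.isdigit()), key=int)
    roots = []
    for pid in pids:
        try:
            with open(os.path.join(proc_root, pid, "comm")) as f:
                name = f.read().strip()
        except OSError:
            continue  # the process exited in the meantime or is not readable
        if command in name:
            roots.append(os.path.join(proc_root, pid, "root"))
    return roots


# Fake /proc tree with the processes seen in the shared pid namespace.
with tempfile.TemporaryDirectory() as fake_proc:
    for pid, comm in {1: "pause", 7: "python", 19: "node", 37: "sh"}.items():
        os.makedirs(os.path.join(fake_proc, str(pid)))
        with open(os.path.join(fake_proc, str(pid), "comm"), "w") as f:
            f.write(comm + "\n")
    python_roots = [os.path.relpath(p, fake_proc)
                    for p in container_roots("python", fake_proc)]
    print(python_roots)  # ['7/root']
```

Keep in mind that following /proc/<PID>/root may be denied when the target process runs as a different user than the debugger.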

Magic! 🪄✨

But all magic always comes with a price... Changing the shareProcessNamespace attribute actually cost us a rollout!

$ kubectl get replicasets -l app=slim

NAME DESIRED CURRENT READY AGE

slim-6d8c6578bc 1 1 1 119s

slim-79487d6484 0 0 0 7m57s

Much like most of the other Pod spec attributes, changing shareProcessNamespace leads to a Pod re-creation. And changing it on the Deployment's template spec level actually causes a full-blown rollout, which might be an unaffordable price for a happy debugging session. But even if you're fine with it, restarting Pods for debugging, in my eyes, defeats the whole purpose of the ephemeral containers capability - they were supposed to be injected without causing Pod disruption.

So, unless your workloads run with shareProcessNamespace enabled by default (which is unlikely to be a good idea since it reduces the isolation between containers), we still need a better solution.

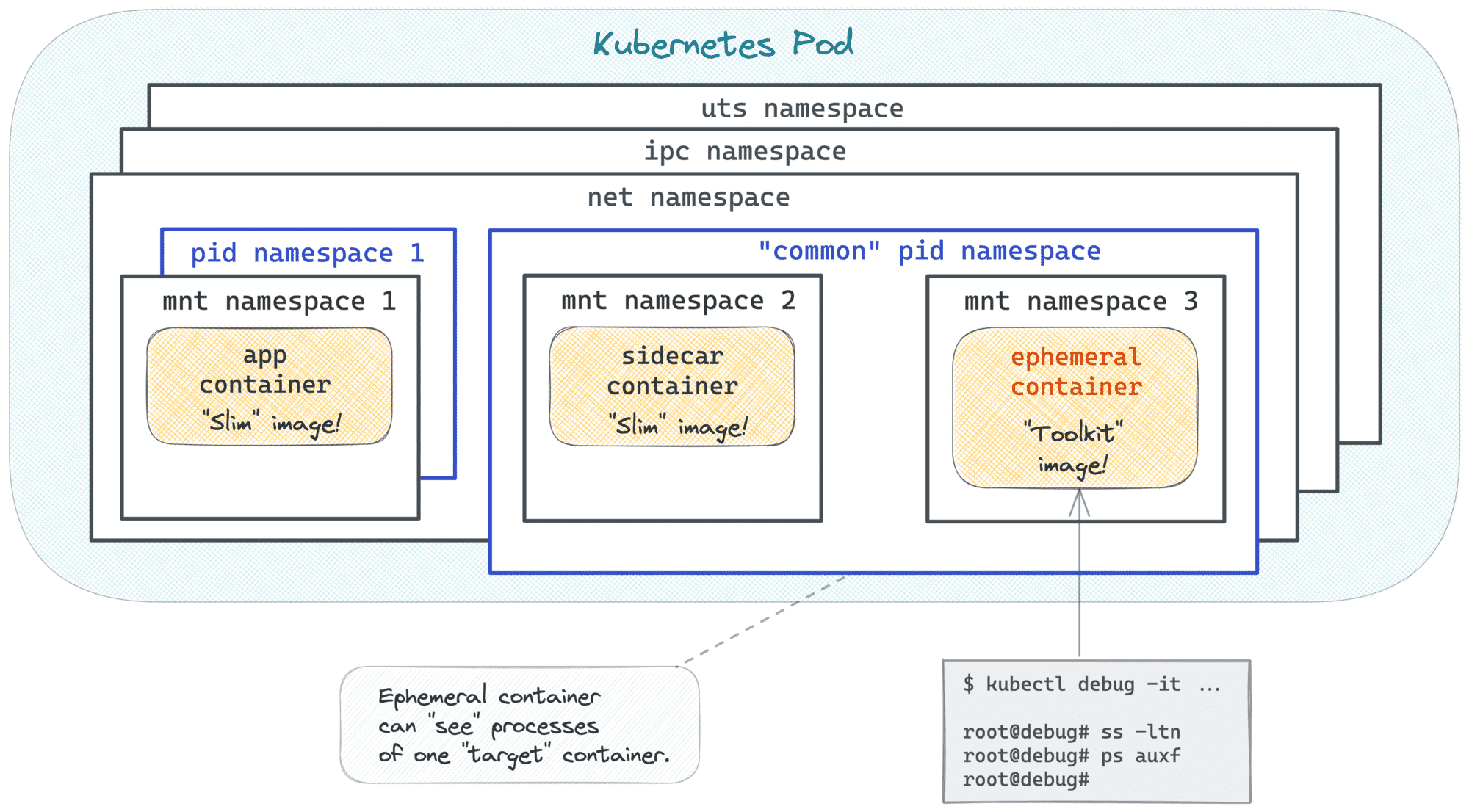

Using kubectl debug targeting a single container

A container can be in only one pid namespace at a time, so if the shared pid namespace wasn't enabled from the very beginning (making all the Pod's containers use the same pid namespace), it isn't possible to start an ephemeral container that sees the processes of all containers.

However, technically, it should still be possible to start a container that shares the pid namespace of one particular container in the Pod!

👉 You can read more about the technique here.

Actually, the Kubernetes CRI Spec specifically mentions this possibility for Pods (overall, it's an insightful read, do recommend!):

// NamespaceOption provides options for Linux namespaces.

type NamespaceOption struct {

// Network namespace for this container/sandbox.

// Note: There is currently no way to set CONTAINER scoped network in the Kubernetes API.

// Namespaces currently set by the kubelet: POD, NODE

Network NamespaceMode `protobuf:"varint,1,opt,name=network,proto3,enum=runtime.v1.NamespaceMode" json:"network,omitempty"`

// PID namespace for this container/sandbox.

// Note: The CRI default is POD, but the v1.PodSpec default is CONTAINER.

// The kubelet's runtime manager will set this to CONTAINER explicitly for v1 pods.

// Namespaces currently set by the kubelet: POD, CONTAINER, NODE, TARGET

Pid NamespaceMode `protobuf:"varint,2,opt,name=pid,proto3,enum=runtime.v1.NamespaceMode" json:"pid,omitempty"`

// IPC namespace for this container/sandbox.

// Note: There is currently no way to set CONTAINER scoped IPC in the Kubernetes API.

// Namespaces currently set by the kubelet: POD, NODE

Ipc NamespaceMode `protobuf:"varint,3,opt,name=ipc,proto3,enum=runtime.v1.NamespaceMode" json:"ipc,omitempty"`

// Target Container ID for NamespaceMode of TARGET. This container must have been

// previously created in the same pod. It is not possible to specify different targets

// for each namespace.

TargetId string `protobuf:"bytes,4,opt,name=target_id,json=targetId,proto3" json:"target_id,omitempty"`

}
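To summarize how the four pid namespace modes from the comments above relate to Pod-level settings, here is a simplified Python model. It is not the kubelet's code: the function name is hypothetical, and the precedence (host pid first, then the shared Pod namespace, then a target container) is my assumption about how these options combine.

```python
from enum import Enum
from typing import Optional


class NamespaceMode(Enum):
    """Mirrors the names of the CRI NamespaceMode values."""
    POD = 0
    CONTAINER = 1
    NODE = 2
    TARGET = 3


def pid_namespace_mode(host_pid: bool, share_process_namespace: bool,
                       target_container: Optional[str]) -> NamespaceMode:
    """Pick the pid namespace mode for a container (simplified model)."""
    if host_pid:
        return NamespaceMode.NODE       # the Pod uses the node's pid namespace
    if share_process_namespace:
        return NamespaceMode.POD        # all containers share one pid namespace
    if target_container:
        return NamespaceMode.TARGET     # join the pid namespace of one container
    return NamespaceMode.CONTAINER      # default: every container is isolated


print(pid_namespace_mode(False, False, None))    # NamespaceMode.CONTAINER
print(pid_namespace_mode(False, True, None))     # NamespaceMode.POD
print(pid_namespace_mode(False, False, "app"))   # NamespaceMode.TARGET
```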

And indeed, a more thorough look at the kubectl debug --help output revealed the --target flag that I missed for some reason during my initial acquaintance with the command:

# Refresh the POD_NAME var since there was a rollout:

$ POD_NAME=$(kubectl get pods -l app=slim -o jsonpath='{.items[0].metadata.name}')

$ kubectl debug -it -c debugger --target=app --image=busybox ${POD_NAME}

Targeting container "app". If you don't see processes from this container

it may be because the container runtime doesn't support this feature.

$# ps auxf

PID USER TIME COMMAND

1 root 0:00 python -m http.server 8080

13 root 0:00 sh

25 root 0:00 ps auxf

Well, apparently my runtime (containerd + runc) does support this feature 😊

Using kubectl debug copying the target Pod

While targeting a specific container in a misbehaving Pod would probably be my favorite option, there is another kubectl debug mode that's worth covering.

Sometimes, it might be a good idea to copy a Pod before starting the debugging. Luckily, the kubectl debug command has a flag for that: --copy-to <new-name>. The new Pod won't be owned by the original workload, nor will it inherit the labels of the original Pod, so it won't be targeted by a potential Service object in front of the workload. This should give you a quiet copy to investigate!

And since it is a copy, the shareProcessNamespace attribute can be set on it without causing any disruption for the production Pods. However, that requires another flag, --share-processes:

$ kubectl debug -it -c debugger --image=busybox \

--copy-to test-pod \

--share-processes \

${POD_NAME}

# All processes are here!

$# ps auxf

PID USER TIME COMMAND

1 65535 0:00 /pause

7 root 0:00 python -m http.server 8080

19 root 0:00 /nodejs/bin/node -e setTimeout(() => console.log("done"), 999999)

37 root 0:00 sh

49 root 0:00 ps auxf

This is what the playground cluster would look like after the above command is applied to a fresh Deployment:

$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/slim-79487d6484-h4rss 2/2 Running 0 63s

pod/test-pod 3/3 Running 0 20s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 11d

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/slim 1/1 1 1 64s

NAME DESIRED CURRENT READY AGE

replicaset.apps/slim-79487d6484 1 1 1 64s
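The copy mode can be pictured as a plain transformation of the Pod object. The Python sketch below is a toy model of what is described above, not kubectl's implementation. The function name `debug_copy` is hypothetical, and appending the debugger as a regular container is an assumption that is at least consistent with the 3/3 READY count of test-pod:

```python
import copy


def debug_copy(pod: dict, new_name: str, debug_container: dict,
               share_processes: bool) -> dict:
    """Toy model of the Pod created by `kubectl debug --copy-to`."""
    clone = copy.deepcopy(pod)
    # Fresh identity: new name, same namespace, no labels, no owner references.
    clone["metadata"] = {
        "name": new_name,
        "namespace": pod["metadata"].get("namespace", "default"),
    }
    clone.pop("status", None)  # status is populated by the cluster, not copied
    # Assumption: in copy mode the debugger becomes a regular container.
    clone["spec"]["containers"].append(debug_container)
    if share_processes:
        clone["spec"]["shareProcessNamespace"] = True
    return clone


original = {
    "metadata": {
        "name": "slim-79487d6484-h4rss",
        "namespace": "default",
        "labels": {"app": "slim"},
        "ownerReferences": [{"kind": "ReplicaSet", "name": "slim-79487d6484"}],
    },
    "spec": {"containers": [{"name": "app"}, {"name": "sidecar"}]},
    "status": {"phase": "Running"},
}

test_pod = debug_copy(original, "test-pod",
                      {"name": "debugger", "image": "busybox"}, share_processes=True)
print(test_pod["metadata"])                 # no labels, no ownerReferences
print(len(test_pod["spec"]["containers"]))  # 3
```

Because the labels are gone, a Service selecting app=slim would not route traffic to the copy, and the original Pod object stays untouched.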

Bonus: Ephemeral Containers Without kubectl debug

It took me a while to figure out how to create an ephemeral container without using the kubectl debug command, so I'll share the recipe here.

💡 Why might you want to create an ephemeral container bypassing the kubectl debug command?

Because it gives you more control over the container's attributes. For instance, with kubectl debug, it's still not possible to create an ephemeral container with a volume mount, while on the API level it's doable.

Using kubectl debug -v 8 revealed that on the API level, ephemeral containers are added to a Pod by PATCH-ing its /ephemeralcontainers subresource. This article from 2019 suggests using kubectl replace --raw /api/v1/namespaces/<ns>/pods/<name>/ephemeralcontainers with a special EphemeralContainers manifest, but this technique doesn't seem to work in Kubernetes 1.23+.

Actually, I couldn't find a way to create an ephemeral container using any kubectl command (except debug, of course). So, here is an example of how to do it using a raw Kubernetes API request:

$ kubectl proxy

$ POD_NAME=$(kubectl get pods -l app=slim -o jsonpath='{.items[0].metadata.name}')

$ curl localhost:8001/api/v1/namespaces/default/pods/${POD_NAME}/ephemeralcontainers \

-XPATCH \

-H 'Content-Type: application/strategic-merge-patch+json' \

-d '

{

"spec":

{

"ephemeralContainers":

[

{

"name": "debugger",

"command": ["sh"],

"image": "busybox",

"targetContainerName": "app",

"stdin": true,

"tty": true,

"volumeMounts": [{

"mountPath": "/var/run/secrets/kubernetes.io/serviceaccount",

"name": "kube-api-access-qnhvv",

"readOnly": true

}]

}

]

}

}'
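The same request can be assembled with Python's standard library, which makes it easier to generate the container spec programmatically. This is a sketch under assumptions: it expects kubectl proxy to be listening on localhost:8001 as in the curl example, the function name is hypothetical, and the Pod name is the one from the listing earlier in the post. The request is only built here; sending it is left commented out.

```python
import json
import urllib.request


def ephemeral_container_request(pod: str, container: dict, namespace: str = "default",
                                base_url: str = "http://localhost:8001") -> urllib.request.Request:
    """Build the PATCH request that appends one ephemeral container to a Pod."""
    url = f"{base_url}/api/v1/namespaces/{namespace}/pods/{pod}/ephemeralcontainers"
    body = json.dumps({"spec": {"ephemeralContainers": [container]}}).encode()
    return urllib.request.Request(
        url,
        data=body,
        method="PATCH",
        headers={"Content-Type": "application/strategic-merge-patch+json"},
    )


request = ephemeral_container_request(
    "slim-79487d6484-h4rss",
    {"name": "debugger", "command": ["sh"], "image": "busybox",
     "targetContainerName": "app", "stdin": True, "tty": True},
)
print(request.get_method(), request.full_url)

# To actually send it (requires `kubectl proxy` to be running):
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```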

Conclusion

Using heavy container images for Kubernetes workloads is inefficient (we've all been through these CI/CD pipelines that take forever to finish) and insecure (the more stuff you have there, the higher the chances of running into a nasty vulnerability). Thus, getting debugging tools into misbehaving Pods on the fly is a much-needed capability, and Kubernetes ephemeral containers do a great job bringing it to our clusters.

I definitely enjoyed playing with the revamped kubectl debug functionality. However, it clearly requires a significant amount of low-level container (and Kubernetes) knowledge to be used efficiently. Otherwise, all sorts of surprising behavior will pop up, from missing processes to unexpected mass restarts of Pods.

Good luck!

Resources

- Kubernetes Documentation - Ephemeral Containers.

- Kubernetes Documentation - Debugging with an ephemeral debug container.

- Google Open Source Blog - Introducing Ephemeral Containers - interesting historical perspective.

- Ephemeral Containers — the future of Kubernetes workload debugging - an early take (2019).

- Using Kubernetes Ephemeral Containers for Troubleshooting - mentions the issue with the shared pid namespace.
