These mysterious HTTP 502s have happened to me twice over the past few years. Since the amount of service-to-service communication only grows every year, I expect more and more people to run into the same issue. So, I'm sharing it here.

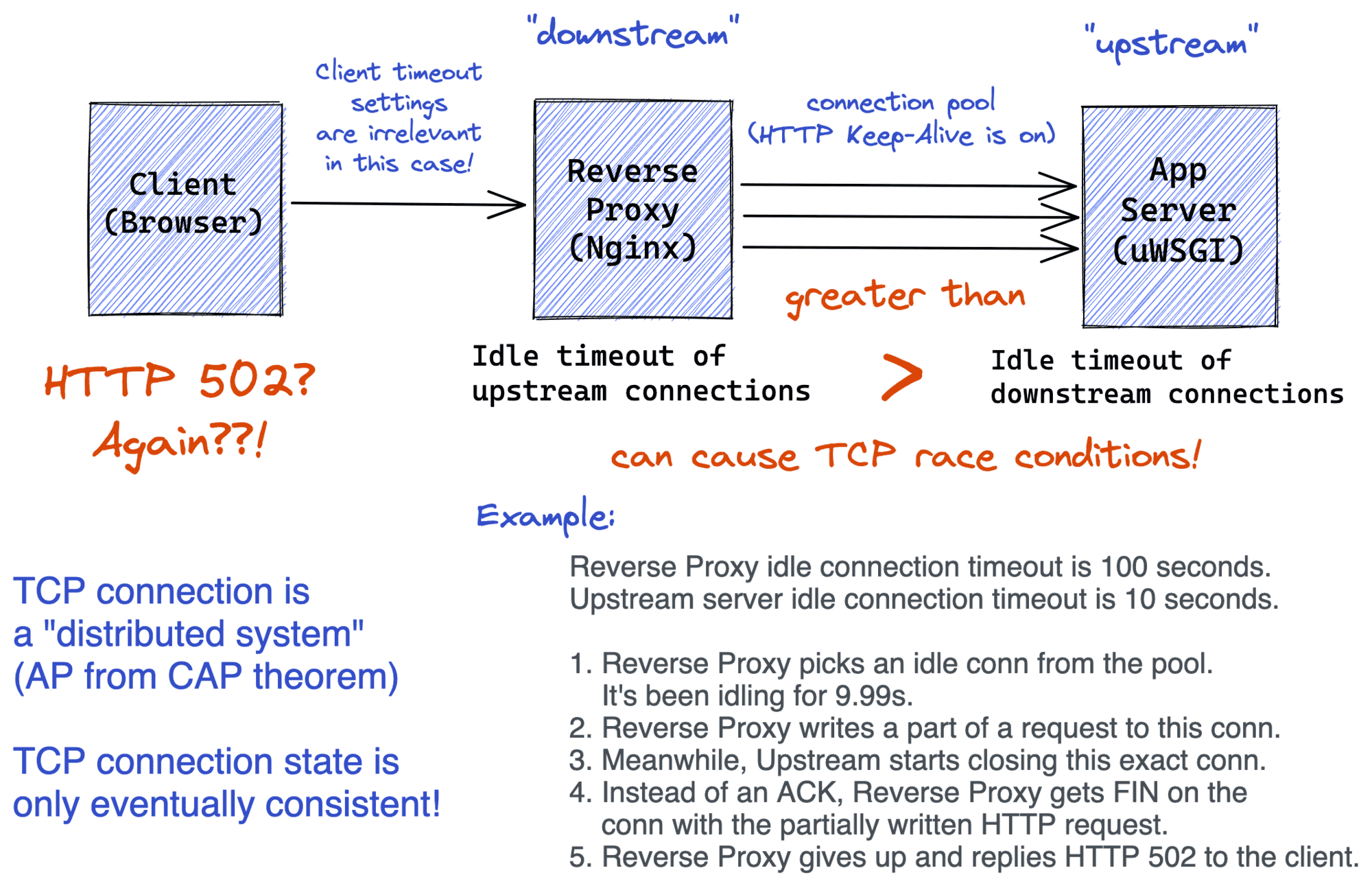

TL;DR: HTTP Keep-Alive between a reverse proxy and an upstream server, combined with some unfortunate downstream- and upstream-side timeout settings, can make clients receive HTTP 502s from the proxy.

Level up your server-side game — join 10,000 engineers getting insightful learning materials straight to their inbox.

Below is a longer version...

The HTTP 502 status code, also known as Bad Gateway, indicates that a server, while acting as a gateway or proxy, received an invalid response from the upstream server. Typical causes (as summarized by the Datadog folks):

- Upstream server isn't running.

- Upstream server times out.

- Reverse proxy is misconfigured (e.g., my favourite one: trying to call uWSGI over HTTP while it listens on the `uwsgi` protocol 🙈).

However, sometimes there is no apparent reason for HTTP 502 responses, yet clients sporadically see them:

- NodeJs application behind Amazon ELB throws 502

- Proxy persistent upstream: occasional 502

- Sporadic 502 response only when running through traefik

- HTTP 502 response generated by a proxy after it tries to send data upstream to a partially closed connection

For me personally, it has happened twice already: the first time with a Node.js application running behind AWS ALB, and the second time with a Python (uWSGI) application running behind the Traefik reverse proxy. And it took my team quite some time to debug it back in the day (we even involved premium AWS support to pin down the root cause).

Long story short, it was a TCP race condition.

A detailed explanation of the problem, including some low-level TCP packet analysis, can be found in this lovely article. And here, I'll just try to give a quick summary.

First off, any TCP connection is a distributed system. That makes sense: the actual state of a connection is distributed between its remote endpoints. And as the CAP theorem dictates, in the presence of network partitions a distributed system cannot be both 100% available and 100% consistent. TCP obviously chooses availability. Hence, the state of a TCP connection is only eventually consistent!

Why does it matter for us?

While this serverfault answer says that HTTP Keep-Alive should be used only for client-to-proxy communication, in my experience proxies often keep upstream connections alive too. This is the so-called connection pool pattern, where just a few connections are heavily reused to handle numerous requests.
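To make the pool pattern concrete, here is a minimal Python sketch of a keep-alive pool with a proxy-side idle timeout. `IdleConnectionPool`, `max_idle` and the injectable `clock` are hypothetical names for illustration. The sketch is not how any particular proxy implements pooling.

```python
import time
from collections import deque
from typing import Callable, Deque, Generic, Tuple, TypeVar

T = TypeVar("T")


class IdleConnectionPool(Generic[T]):
    """Hypothetical keep-alive pool: reuses idle connections, closes ones idle too long."""

    def __init__(
        self,
        connect: Callable[[], T],
        close: Callable[[T], None],
        max_idle: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._connect = connect    # opens a new upstream connection
        self._close = close        # closes a connection from the proxy's side
        self._max_idle = max_idle  # the proxy's idle timeout, in seconds
        self._clock = clock
        # (connection, released_at) pairs: oldest on the left, newest on the right.
        self._idle: Deque[Tuple[T, float]] = deque()

    def acquire(self) -> T:
        now = self._clock()
        # Close connections that have been idle for max_idle seconds or longer.
        while self._idle and now - self._idle[0][1] >= self._max_idle:
            self._close(self._idle.popleft()[0])
        if self._idle:
            # Reuse the most recently released connection. The pool only knows its
            # *own* idle timer; the upstream may have closed this connection already.
            return self._idle.pop()[0]
        return self._connect()

    def release(self, conn: T) -> None:
        self._idle.append((conn, self._clock()))
```

The comment in `acquire` is the crux of this whole story. The pool decides whether a connection is still usable based only on its local timer, `max_idle`, and knows nothing about the upstream's timer.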

When a connection stays in the pool without being reused, it's considered idle. Usually, idle connections are closed after some period of inactivity. However, the server side (i.e., the upstream) can have its own notion of idle connections, too. And sometimes, the proxy and the upstream end up with a rather unfortunate combination of idle timeout settings. If the proxy and the upstream have exactly the same idle timeout or the upstream drops connections sooner than the proxy, end clients start experiencing HTTP 502s.

While one endpoint (the proxy) may still think that the connection is totally fine, the other endpoint (the upstream) may have already started closing it.
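To see why the order of the two timeouts matters, here is a toy Python model of a single pooled connection. `count_stale_reuses` and its parameters are hypothetical. The model ignores everything except the two idle timers and the round-trip time (`rtt`), so treat it as an illustration of the argument rather than a faithful simulation of any real proxy.

```python
from typing import Iterable, Optional


def count_stale_reuses(
    request_times: Iterable[float],
    proxy_idle: float,
    upstream_idle: float,
    rtt: float = 0.0,
) -> int:
    """Toy model of one pooled connection; returns how many requests likely end in a 502.

    Simplifying assumptions (not how any particular proxy behaves):
    - the proxy closes the connection itself once it has been idle for >= proxy_idle;
    - the proxy does not notice the upstream's FIN until it writes a request;
    - the upstream's idle clock starts ~rtt/2 earlier than the proxy's (it sent the last
      response before the proxy received it) and a new request needs another ~rtt/2 to
      arrive, so it hits a closing connection once the proxy-observed idle time is
      >= upstream_idle - rtt.
    """
    stale = 0
    last_used: Optional[float] = None  # last use of the pooled connection; None = none open
    for t in sorted(request_times):
        if last_used is not None:
            idle = t - last_used
            if idle < proxy_idle and idle >= upstream_idle - rtt:
                # The proxy still trusts the connection, but the upstream has dropped it.
                stale += 1
                last_used = None  # the broken connection is discarded
                continue
        # Either a healthy reuse or a fresh connection (the proxy's own timeout fired).
        last_used = t
    return stale


if __name__ == "__main__":
    print(count_stale_reuses([0, 10, 12], proxy_idle=60, upstream_idle=5))  # 1
    print(count_stale_reuses([0, 10, 70, 71], proxy_idle=60, upstream_idle=75, rtt=0.001))  # 0
    print(count_stale_reuses([0, 59.9995], proxy_idle=60, upstream_idle=60, rtt=0.001))  # 1
```

With equal timeouts, only requests that land in a window roughly one round trip wide are affected. That is consistent with these 502s being rare and sporadic rather than constant.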

So, the proxy starts writing a request into a connection that the upstream is already closing. Instead of getting a TCP ACK for the sent bytes, the proxy gets a TCP FIN (or even an RST) from the upstream. Oftentimes, the proxy just gives up on such a request and responds to the client with an immediate HTTP 502. And this is completely invisible on the application side! So, no application logs will help in such a case.
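The failure mode itself is easy to reproduce on localhost with the Python standard library. The sketch below plays both roles:

- A toy upstream closes its keep-alive connection after `UPSTREAM_IDLE_TIMEOUT` seconds of inactivity.
- A toy "proxy" reuses its pooled connection after `PROXY_REUSE_DELAY` seconds.

Both constants and all function names are made up for this illustration. To keep the result deterministic, the sketch reuses the connection *after* the upstream has closed it rather than inside the tiny closing window. What the proxy observes is the same either way: an EOF or a reset instead of a response. A real proxy would turn that into a 502.

```python
import socket
import threading
import time
from typing import List

UPSTREAM_IDLE_TIMEOUT = 0.2  # hypothetical upstream keep-alive idle timeout, seconds
PROXY_REUSE_DELAY = 0.5      # the "proxy" reuses its pooled connection after this long

REQUEST = b"GET / HTTP/1.1\r\nHost: upstream\r\n\r\n"
RESPONSE = b"HTTP/1.1 200 OK\r\nContent-Length: 2\r\n\r\nok"


def toy_upstream(listener: socket.socket) -> None:
    """Serve requests on one keep-alive connection; close it once it sits idle."""
    conn, _ = listener.accept()
    conn.settimeout(UPSTREAM_IDLE_TIMEOUT)
    with conn:  # leaving this block closes the socket, which sends a FIN
        while True:
            try:
                data = conn.recv(4096)  # toy: assumes one recv() == one whole request
            except socket.timeout:
                return  # idle for too long: drop the connection
            if not data:
                return  # the peer closed the connection
            conn.sendall(RESPONSE)


def proxy_round_trip(conn: socket.socket) -> str:
    """Send one request over a pooled connection; return the status line a client would see."""
    try:
        conn.sendall(REQUEST)
        reply = conn.recv(4096)
    except OSError:  # e.g. ConnectionResetError or BrokenPipeError
        return "502 Bad Gateway (connection reset)"
    if not reply:
        return "502 Bad Gateway (upstream closed the connection)"
    return reply.split(b"\r\n", 1)[0].decode()


def main() -> List[str]:
    listener = socket.create_server(("127.0.0.1", 0))  # port 0: pick any free port
    port = listener.getsockname()[1]
    threading.Thread(target=toy_upstream, args=(listener,), daemon=True).start()

    results = []
    with socket.create_connection(("127.0.0.1", port)) as pooled:
        results.append(proxy_round_trip(pooled))  # fresh connection: succeeds
        time.sleep(PROXY_REUSE_DELAY)             # connection sits idle in the "pool"
        results.append(proxy_round_trip(pooled))  # the upstream has dropped it by now
    listener.close()
    return results


if __name__ == "__main__":
    for line in main():
        print(line)
```

Whether the second attempt surfaces as an EOF or as a reset depends on the OS and timing, so the sketch treats both as a 502.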

> HTTP Keep-Alive can cause TCP race conditions 🤯
>
> Ok, it happened again... Hence, the diagram!
>
> In a (reverse_proxy + app_server) setup, the reverse proxy should be dropping connections more frequently than the app server. Otherwise, clients will see sporadic HTTP 502s.
>
> Why? 🔽 pic.twitter.com/YBb2tP3Zfg
>
> Ivan Velichko (@iximiuz), September 29, 2021

The problem is actually quite generic. Theoretically, it can happen in any point-to-point TCP communication where connections are reused and the upstream drops them sooner than the downstream does. In practice, though, it most often shows up with a reverse proxy talking to an upstream with HTTP Keep-Alive enabled.

How to mitigate:

- Make sure the upstream (application) idle connection timeout is longer than the downstream (proxy) idle connection timeout.

- Disable connection reuse between the upstream and the proxy (turn off HTTP Keep-Alive).

- Employ HTTP 5xx retries on the client side (well, they are often a must-have anyway).
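The exact knob for the first mitigation depends on the application server. Here is a minimal sketch using Python's standard `http.server`; this is an assumption for illustration, since the setups above were Node.js and uWSGI.

- `protocol_version = "HTTP/1.1"` turns on persistent connections.
- The handler's `timeout` attribute becomes the socket timeout, so an idle keep-alive connection is closed once no new request arrives within that many seconds.

`PROXY_IDLE_TIMEOUT` is a placeholder. 60 seconds happens to be AWS ALB's default idle timeout, but check what your proxy actually uses.

```python
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

PROXY_IDLE_TIMEOUT = 60.0  # placeholder: the proxy's idle timeout for upstream connections
UPSTREAM_IDLE_TIMEOUT = PROXY_IDLE_TIMEOUT + 15.0  # upstream keeps idle conns open longer


class KeepAliveHandler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"    # persistent (keep-alive) connections
    timeout = UPSTREAM_IDLE_TIMEOUT  # socket timeout: an idle connection is closed after it

    def do_GET(self) -> None:
        body = b"ok"
        self.send_response(200)
        # Content-Length lets the client find the end of the body on a reused connection.
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args) -> None:
        pass  # keep the example quiet


def serve(port: int = 0) -> ThreadingHTTPServer:
    """Start the upstream in a background thread; port 0 picks a free port."""
    assert UPSTREAM_IDLE_TIMEOUT > PROXY_IDLE_TIMEOUT, "upstream must outlive the proxy"
    server = ThreadingHTTPServer(("127.0.0.1", port), KeepAliveHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Note that this `timeout` applies to every read and write on the socket, not just to the gap between requests. Other servers expose dedicated keep-alive settings; Node.js, for example, has `server.keepAliveTimeout`, which defaults to 5 seconds. Whatever the knob, the rule of thumb stays the same: the upstream should keep idle connections open longer than the proxy does.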
