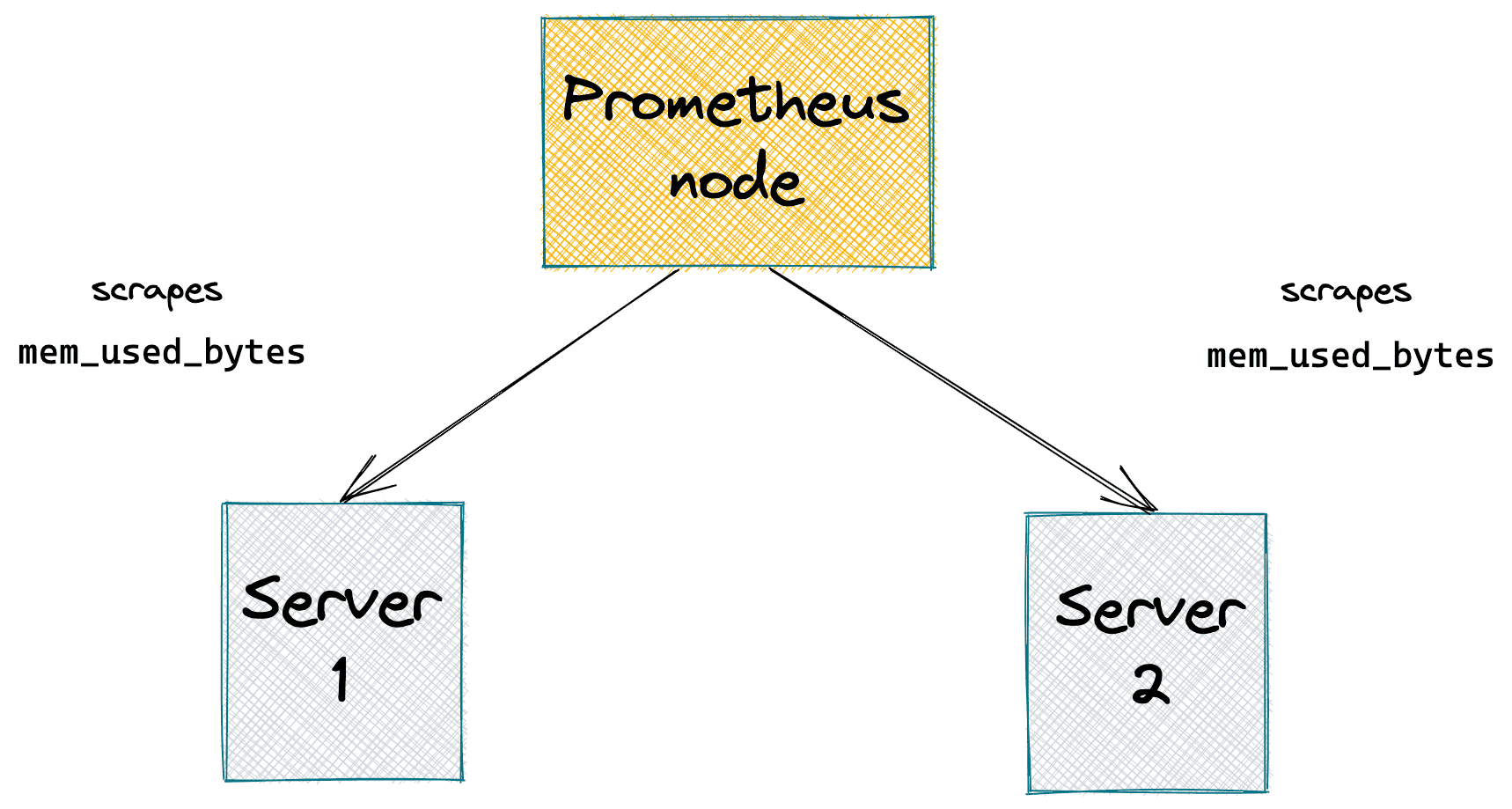

When you have a long series of numbers, such as server memory consumption scraped every 10 seconds, it's natural to want to derive another, probably more meaningful series from it by applying a moving window function. For instance, a moving average or a moving quantile can give you much more readable results by smoothing out spikes.
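To make the idea concrete, here is a minimal sketch of a moving average in plain Python. The function name `moving_average`, the window size, and the memory readings are all made up for illustration; this is not how Prometheus computes anything internally.

```python
from collections import deque


def moving_average(values, window_size):
    """Return the average of the last `window_size` values at every position."""
    window = deque(maxlen=window_size)  # the oldest value is dropped automatically
    result = []
    for value in values:
        window.append(value)
        # Until the window fills up, average over what we have so far.
        result.append(sum(window) / len(window))
    return result


# Hypothetical memory readings (in MiB) with a single spike.
memory_mib = [100, 102, 101, 180, 103, 99]
smoothed = moving_average(memory_mib, window_size=3)
print(smoothed)
```

The spike of 180 is still visible in the smoothed series, but it is spread over three points and its peak is much lower.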

Prometheus has a bunch of functions called `<smth>_over_time()`. They can be applied only to range vectors, which essentially makes them window aggregation functions. Every such function takes in a range vector and produces an instant vector whose elements are per-series aggregations.

For people like me who normally grasp code faster than text, here is some pseudocode of the aggregation logic:

```python
# Input vector example.
# Each element is (labels, samples); every sample is a (value, timestamp) pair.
# Label sets are unique per series.
range_vector = [
    ({"lab1": "val1", "lab2": "val2"}, [(12, 1624722138), (11, 1624722148), (17, 1624722158)]),
    ({"lab1": "val1", "lab2": "val3"}, [(14, 1624722138), (10, 1624722148), (13, 1624722158)]),
    ({"lab1": "val1", "lab2": "val4"}, [(16, 1624722138), (12, 1624722148), (15, 1624722158)]),
    ({"lab1": "val1", "lab2": "val5"}, [(12, 1624722138), (17, 1624722148), (18, 1624722158)]),
]


# agg_func examples: `sum`, `min`, `max`, `avg`, `last`, etc.
def agg_over_time(range_vector, agg_func, timestamp):
    # The future instant vector.
    instant_vector = {"timestamp": timestamp, "elements": []}

    for (labels, samples) in range_vector:
        # Every instant vector element is
        # an aggregation of multiple samples.
        # Only the values are aggregated; sample timestamps are dropped.
        values = [value for (value, _ts) in samples]
        sample = agg_func(values)
        instant_vector["elements"].append((labels, sample))

    # Notice that the timestamp of the resulting instant vector
    # is the timestamp of the query execution. I.e., it may not
    # match any of the timestamps in the input range vector.
    return instant_vector
```
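The pseudocode above starts from an already selected range vector. As a rough sketch of the step before it, here is how the samples of one series could be picked for a given evaluation timestamp and range, and how evaluating at several timestamps turns the aggregation into a moving window. The helper names (`select_window`, `avg_over_time_at`) and the sample data are hypothetical. The exact window boundary semantics differ between Prometheus versions, so treat the left-open interval used here as an assumption.

```python
def select_window(samples, eval_ts, range_seconds):
    """Pick the samples of one series that fall into (eval_ts - range, eval_ts]."""
    start = eval_ts - range_seconds
    return [(value, ts) for value, ts in samples if start < ts <= eval_ts]


def avg_over_time_at(samples, eval_ts, range_seconds):
    """Average of the sample values inside the window, or None if it is empty."""
    values = [value for value, _ts in select_window(samples, eval_ts, range_seconds)]
    return sum(values) / len(values) if values else None


# Hypothetical samples of a single series: (value, unix_timestamp), one every 10s.
samples = [(12, 1624722138), (11, 1624722148), (17, 1624722158), (14, 1624722168)]

# Evaluate a 20-second window at three consecutive evaluation timestamps.
# None of them coincides with a sample timestamp.
eval_timestamps = (1624722150, 1624722160, 1624722170)
results = [(ts, avg_over_time_at(samples, ts, 20)) for ts in eval_timestamps]
for ts, value in results:
    print(ts, value)
```

Each evaluation timestamp sees a different slice of the same underlying samples, which is what makes the result behave like a moving average.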
