- Not Every Container Has an Operating System Inside

- You Don't Need an Image To Run a Container

- You Need Containers To Build Images

- Containers Aren't Linux Processes

- From Docker Container to Bootable Linux Disk Image

Don't miss new posts in the series! Subscribe to the blog updates and get deep technical write-ups on Cloud Native topics direct into your inbox.

TL;DR Per OCI Runtime Specification:

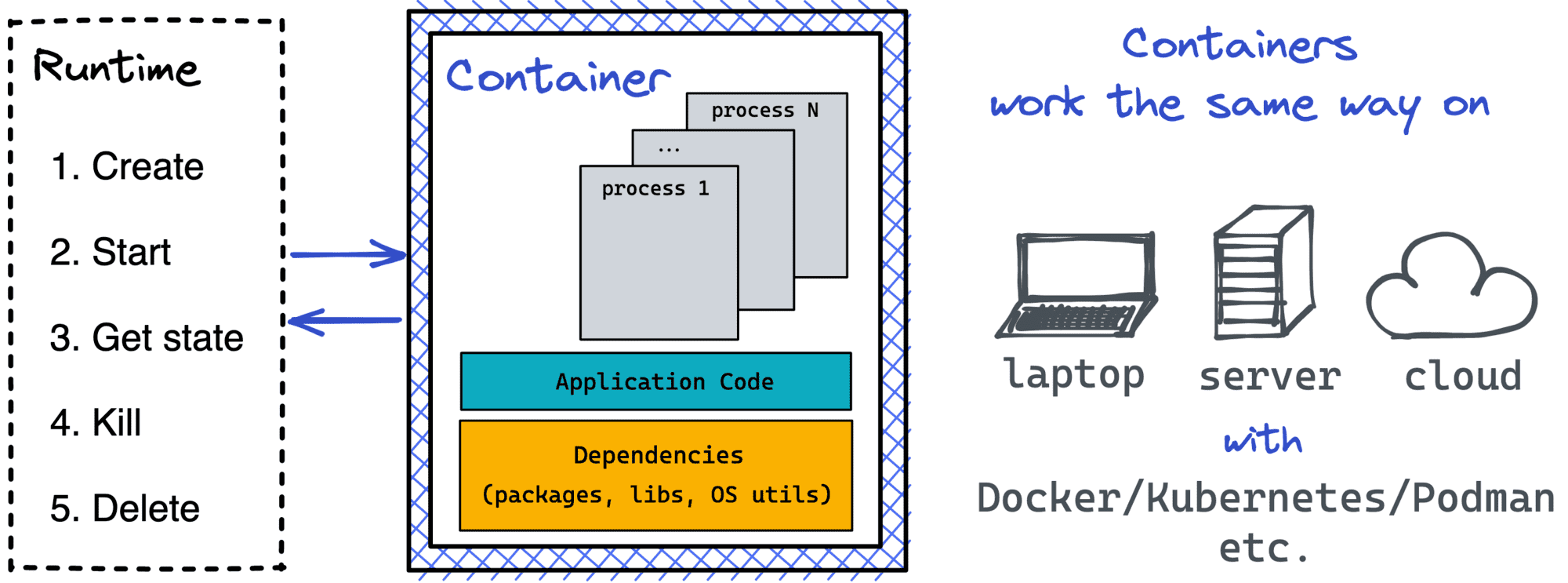

- Containers are isolated and restricted boxes for running processes 📦

- Containers pack an app and all its dependencies (including OS libs) together

- Containers are for portability - any compliant runtime can run a standard bundle

- Containers can be implemented using Linux, Windows, and other OS-es

- Virtual Machines also can be used as standard containers 🤐

There are many ways to create containers, especially on Linux and the like. Besides the super widespread Docker implementation, you may have heard about LXC, systemd-nspawn, or maybe even OpenVZ.

The general concept of the container is quite vague. What's true and what's not often depends on the context, but the context itself isn't always given explicitly. For instance, there is a common saying that containers are Linux processes or that containers aren't Virtual Machines. However, the first statement is just an oversimplified attempt to explain Linux containers. And the second statement simply isn't always true.

In this article, I'm not trying to review all possible ways of creating containers. Instead, the article is an analysis of the OCI Runtime Specification. The spec turned out to be an insightful read! For instance, it gives a definition of the standard container (and no, it's not a process) and sheds some light on when Virtual Machines can be considered containers.

Containers, as brought to us by Docker and Podman, are OCI-compliant. Today we even use the terms container, Docker container, and Linux container interchangeably. However, this is just one type of OCI-compliant container. So, let's take a closer look at the OCI Runtime Specification.

What is Open Container Initiative (OCI)

Open Container Initiative (OCI) is an open governance structure that was established in 2015 by Docker and other prominent players of the container industry for the express purpose of creating open industry standards around container formats and runtimes. In other words, OCI develops specifications for standards on Operating System process and application containers.

Also think it's too many fancy words for such a small paragraph?

Here is how I understand it. By 2015 Docker had already gained quite some popularity, but there were other competing projects implementing their own containers, like rkt and lmctfy. Apparently, the OCI was established to standardize the way of doing containers. De facto, it made Docker's container implementation the standard one, but some non-Docker parts were incorporated too.

What is an OCI Container

So, how does OCI define a Container nowadays?

A Standard Container is an environment for executing processes with configurable isolation and resource limitations.

Why do we even need containers?

[To] define a unit of software delivery ... The goal of a Standard Container is to encapsulate a software component and all its dependencies in a format that is self-describing and portable, so that any compliant runtime can run it without extra dependencies, regardless of the underlying machine and the contents of the container.

Ok, and what can we do with containers?

[Containers] can be created, started, and stopped using standard container tools; copied and snapshotted using standard filesystem tools; and downloaded and uploaded using standard network tools.

Operations on containers that OCI runtimes must support: Create, Start, Kill, Delete, and Query State.

Well, makes sense. But... a container cannot be a process then! According to the OCI Runtime Spec, it's more like an isolated and restricted box for running one or more processes inside.
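To make the "box, not a process" idea more tangible, here is a minimal sketch of the five operations listed above as a toy state machine. It is not how runc or any real runtime is implemented. `ToyContainer` and `LifecycleError` are hypothetical names, the `deleted` marker is my own addition rather than a status from the spec, and I assume the process exits immediately on kill.

```python
from dataclasses import dataclass, field


class LifecycleError(Exception):
    """Raised when an operation is not valid in the container's current state."""


@dataclass
class ToyContainer:
    """A toy model of a container as a box that may hold processes."""

    container_id: str
    bundle: str
    status: str = "creating"
    pids: list = field(default_factory=list)  # processes living inside the box

    def create(self):
        if self.status != "creating":
            raise LifecycleError("create is only valid for a new container")
        # The environment exists now, but no user process runs in it yet.
        self.status = "created"

    def start(self):
        if self.status != "created":
            raise LifecycleError("start requires a created container")
        self.pids.append(1)  # hypothetical PID of the user-specified process
        self.status = "running"

    def kill(self, signal="SIGTERM"):
        if self.status not in ("created", "running"):
            raise LifecycleError("kill requires a created or running container")
        # A real runtime only delivers the signal; this sketch assumes
        # the process exits right away.
        self.pids.clear()
        self.status = "stopped"

    def delete(self):
        if self.status != "stopped":
            raise LifecycleError("delete requires a stopped container")
        self.status = "deleted"  # not a spec status, just a marker for this sketch

    def state(self):
        return {"id": self.container_id, "status": self.status, "bundle": self.bundle}


box = ToyContainer("demo", "/tmp/bundle")
box.create()
print(box.state())  # the box exists before any process runs in it
box.start()
box.kill()
box.delete()
```

Notice that after `create()` the container already has a state to query while `pids` is still empty, which is exactly why equating a container with a process doesn't quite work.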

Linux Container vs. Other Containers

Apart from the container's operations and lifecycle, the OCI Runtime Spec also specifies the container's configuration and execution environment.

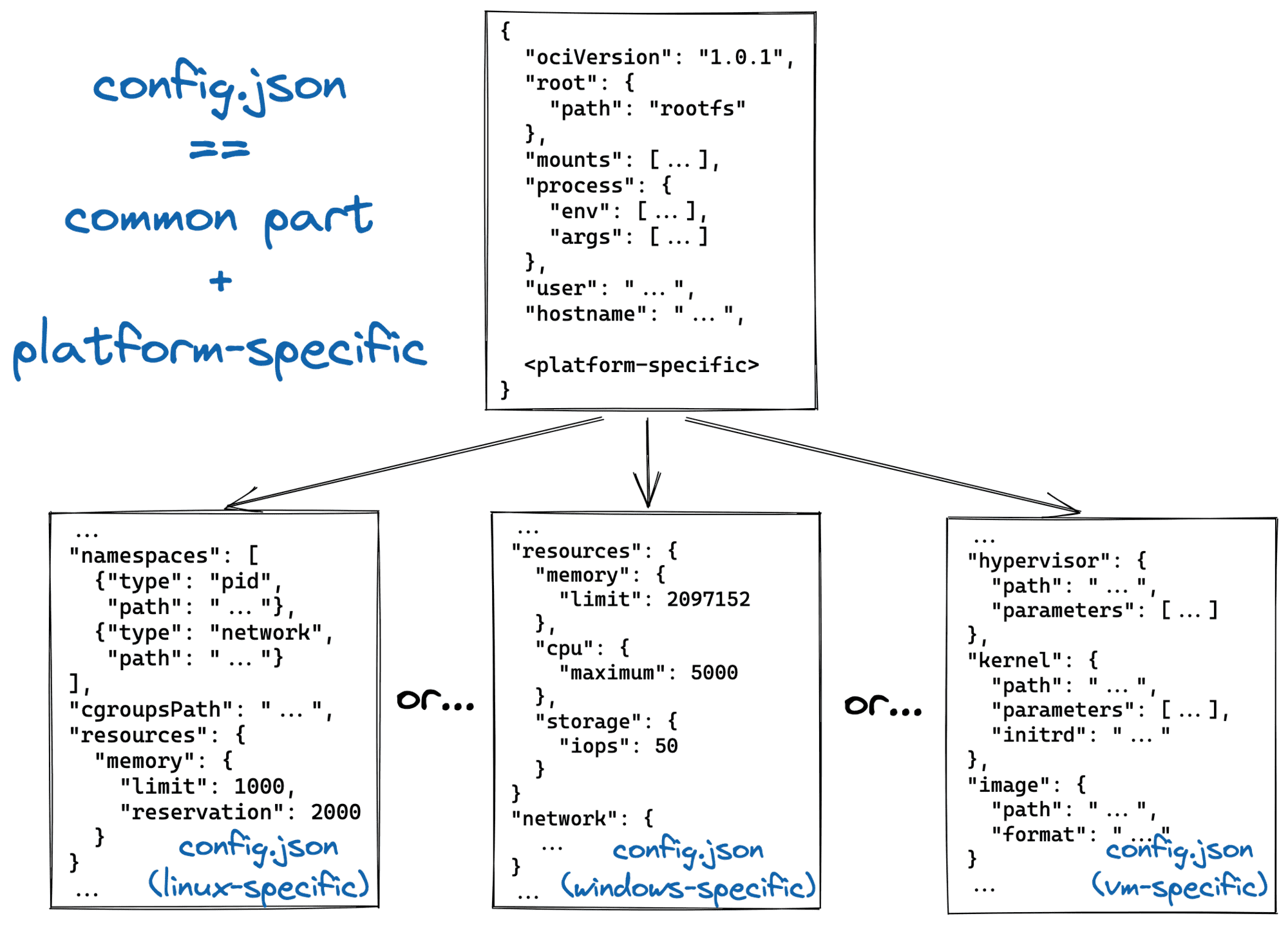

Per the OCI Runtime Spec, to create a container, one needs to provide a runtime with a so-called filesystem bundle that consists of a mandatory config.json file and an optional folder holding the future container's root filesystem.
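The bundle layout described above can be sketched in a few lines. This is an illustration under my own assumptions: `describe_bundle` is a hypothetical helper, not part of any runtime, and the `config.json` it reads is deliberately stripped down to the two fields needed for the demo.

```python
import json
import tempfile
from pathlib import Path


def describe_bundle(bundle_dir):
    """Return (config, rootfs_path_or_None) for a directory laid out as a bundle."""
    bundle = Path(bundle_dir)
    config_path = bundle / "config.json"
    if not config_path.is_file():
        raise FileNotFoundError("a bundle must contain config.json at its top level")
    config = json.loads(config_path.read_text())
    root = config.get("root")  # the root filesystem is optional
    rootfs = bundle / root["path"] if root else None
    if rootfs is not None and not rootfs.is_dir():
        raise FileNotFoundError(f"root filesystem directory not found: {rootfs}")
    return config, rootfs


# Build a throwaway bundle to show the layout: config.json plus a rootfs folder.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "rootfs").mkdir()
    (Path(tmp) / "config.json").write_text(
        json.dumps({"ociVersion": "1.0.2", "root": {"path": "rootfs"}})
    )
    config, rootfs = describe_bundle(tmp)
    print(config["ociVersion"], rootfs.name)
```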

Off-topic: A bundle is usually obtained by unpacking a container image, but images aren't a part of the Runtime Spec. Instead, they are subject to the dedicated OCI Image Specification.

config.json contains data necessary to implement standard operations against the container (Create, Start, Query State, Kill, and Delete). But things start getting really interesting when it comes to the actual structure of the config.json file.

The configuration consists of the common and platform-specific sections. The common section includes ociVersion, root filesystem path within the bundle, additional mounts beyond the root, a process to start in the container, a user, and a hostname. Hm... but where are the famous namespaces and cgroups?
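For illustration, here is roughly what the common section might look like when written out as a Python dictionary. The concrete values (`1.0.2`, `rootfs`, `sh`, `demo`) are placeholders I picked for the sketch. Note that in the spec the user is nested inside the process object.

```python
import json

# A sketch of the platform-independent part of config.json.
# All values are illustrative placeholders.
common_config = {
    "ociVersion": "1.0.2",
    "root": {"path": "rootfs", "readonly": True},  # path is relative to the bundle
    "mounts": [
        # additional mounts beyond the root filesystem
        {"destination": "/proc", "type": "proc", "source": "proc"},
    ],
    "process": {
        "cwd": "/",
        "args": ["sh"],
        "env": ["PATH=/usr/bin:/bin"],
        "user": {"uid": 0, "gid": 0},  # the user lives inside the process object
    },
    "hostname": "demo",
}

print(json.dumps(common_config, indent=2))
print(sorted(common_config))  # top-level keys: no namespaces or cgroups in sight
```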

At the time of writing this article, the OCI Runtime Spec defines containers for the following platforms: Linux, Solaris, Windows, z/OS, and Virtual Machine.

Wait, what?! VMs are Containers??! 🤯

In particular, the Linux-specific section brings in (among other things) pid, network, mount, ipc, uts, and user namespaces, control groups, and seccomp. In contrast, the Windows-specific section comes with its own isolation and restriction mechanisms provided by the Windows Host Compute Service (HCS).

Thus, only Linux containers rely on namespaces and cgroups. However, not all standard containers are Linux.
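Here is a hedged sketch of how the Linux-specific section plugs into the configuration. The field names follow my reading of the spec. The limits and the seccomp rule are arbitrary values chosen for the example, and `platform_sections` is a hypothetical helper that merely reports which platform-specific sections a config carries.

```python
# Top-level keys the spec uses for platform-specific sections.
PLATFORM_KEYS = ("linux", "windows", "solaris", "vm", "zos")

# Namespaces, cgroups (resources), and seccomp only appear under "linux".
linux_section = {
    "namespaces": [
        {"type": ns_type}
        for ns_type in ("pid", "network", "mount", "ipc", "uts", "user")
    ],
    "resources": {
        "memory": {"limit": 256 * 1024 * 1024},  # bytes, arbitrary example value
        "pids": {"limit": 64},                   # arbitrary example value
    },
    "seccomp": {
        "defaultAction": "SCMP_ACT_ALLOW",
        "syscalls": [{"names": ["reboot"], "action": "SCMP_ACT_ERRNO"}],
    },
}


def platform_sections(config):
    """List the platform-specific sections present in a config dictionary."""
    return [key for key in PLATFORM_KEYS if key in config]


config = {
    "ociVersion": "1.0.2",
    "process": {"cwd": "/", "args": ["sh"]},
    "linux": linux_section,
}

print(platform_sections(config))
print([ns["type"] for ns in config["linux"]["namespaces"]])
```

Drop the `linux` key and the rest of the configuration is still a perfectly valid description of a box; it just says nothing about namespaces and cgroups anymore.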

Virtual Machines vs. Containers

The most widely-used OCI runtimes are runc and crun. Unsurprisingly, both implement Linux containers. But as we just saw, OCI Runtime Spec mentions Windows, Solaris, and other containers. And what's even more intriguing for me, it defines VM containers!

Weren't containers meant to replace VMs as a more lightweight implementation of the same execution environment abstraction?

Anyways, let's take a closer look at VM containers.

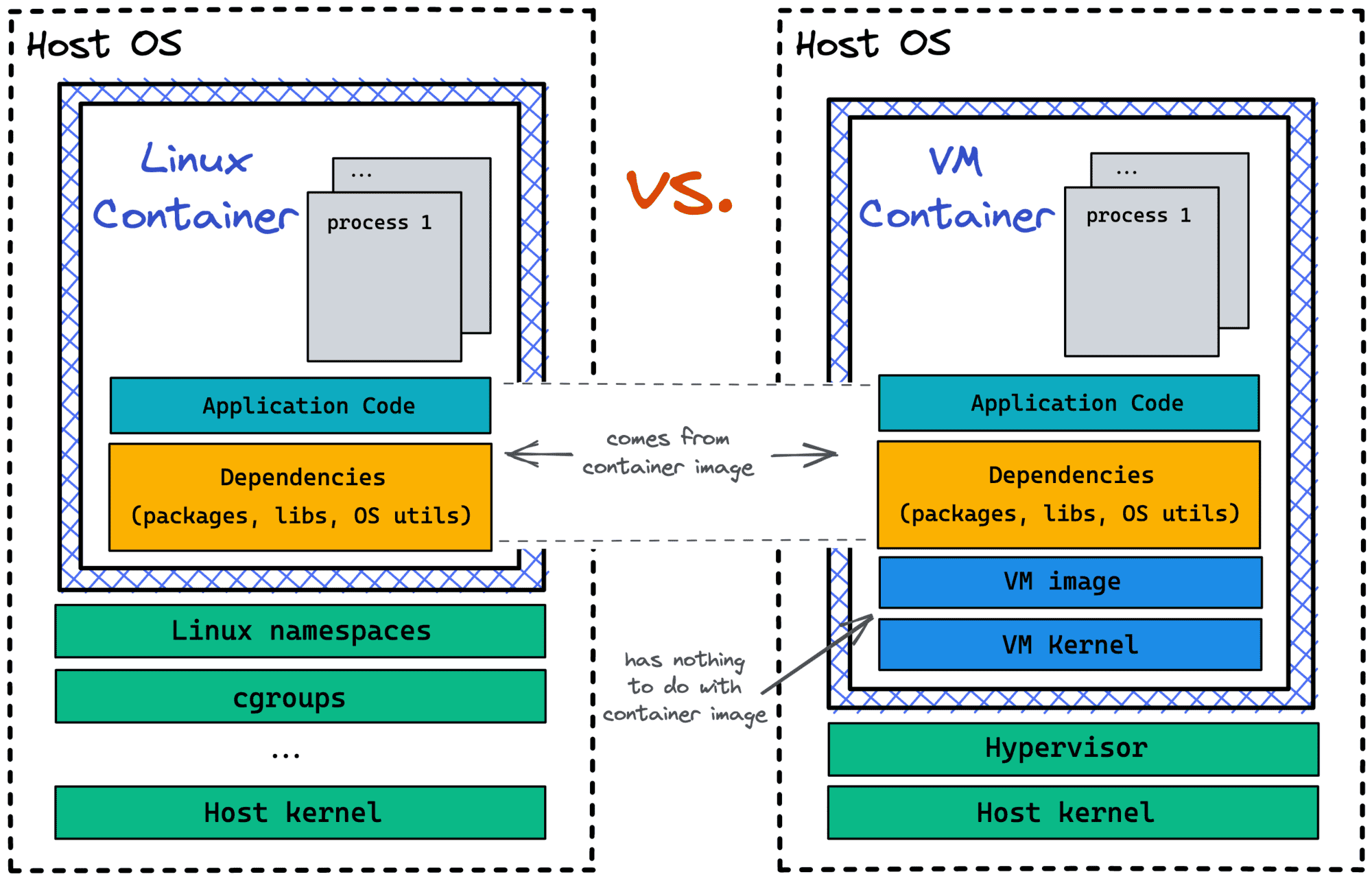

Clearly, they are not backed by Linux namespaces and cgroups. Instead, the Virtual-machine-specific Container Configuration mentions a hypervisor, a kernel, and a VM image. So, the isolation is achieved by virtualizing some hardware (hypervisor) and then booting a full-fledged OS (kernel + image) on top of it. The resulting environment is our box, i.e., a container.

Notice that the VM image mentioned by the OCI Runtime Spec has nothing to do with the traditional container image that is used to create a bundle. The bundle root filesystem is mounted into a VM container separately.
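To illustrate, a VM-specific section might look roughly like the dictionary below. The field names reflect my reading of the spec, and all paths and parameters are made-up placeholders. `describe_vm_container` is a hypothetical helper that only spells out which part plays which role.

```python
# A sketch of a config with a "vm" section. All paths are placeholders.
vm_config = {
    "ociVersion": "1.0.2",
    "root": {"path": "rootfs"},  # the bundle's root filesystem, as before
    "process": {"cwd": "/", "args": ["sh"]},
    "vm": {
        "hypervisor": {"path": "/path/to/vmm", "parameters": ["opts1=foo"]},
        "kernel": {
            "path": "/path/to/vmlinuz",
            "parameters": ["console=ttyS0"],
            "initrd": "/path/to/initrd.img",
        },
        "image": {"path": "/path/to/vm/rootfs.img", "format": "raw"},
    },
}


def describe_vm_container(config):
    """Spell out the role of each piece of a VM-backed container config."""
    vm = config["vm"]
    return {
        "virtualized_by": vm.get("hypervisor", {}).get("path"),
        "boots_kernel": vm["kernel"]["path"],
        "guest_image": vm.get("image", {}).get("path"),
        # Separate from the guest image: this is what gets mounted into the VM.
        "bundle_rootfs": config["root"]["path"],
    }


for role, path in describe_vm_container(vm_config).items():
    print(f"{role}: {path}")
```

The guest image and the bundle's root filesystem show up as two different entries, which is the distinction made in the paragraph above.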

Thus, VM-based containers are a thing!

However, the only non-deprecated implementation of OCI VM containers, Kata Containers, has the following in its FAQ:

Kata Containers is still in its formational stages, but the technical basis for the project - Clear Containers and runV - are used globally at enterprise scale by organizations like JD.com, China's largest ecommerce company (by revenue).

That is, the good old Linux containers remain the default production choice. So, containers are still just [boxed] processes.

UPD: Adel Zaalouk (@ZaNetworker) kindly pointed me to the OpenShift Sandboxed Containers project. It's an attempt to make OpenShift workloads more secure. Long story short, it uses Kata Containers to run OpenShift Pods inside lightweight Virtual Machines. And it's already in technology preview. Here is a nice intro and the coolest diagram ever showing how it works in great detail (and its interactive counterpart). Do keep this addition in mind while reading the following section :)

MicroVMs vs. Containers

One of the coolest parts of Linux containers is that they are much more lightweight than Virtual Machines. The startup time is under a second, and there is almost no space and runtime overhead. However, their strongest part is their weakness as well. Linux containers are so fast because they are regular processes. So, they are as secure as the underlying Linux host. Thus, Linux containers are only good for trusted workloads.

Since shared infrastructure becomes more and more common, the need for stronger isolation remains. Serverless/FaaS computing is probably one of the most prominent examples. By running code in AWS Lambda or alike, you just don't deal with the server abstraction anymore. Hence, there is no need for virtual machines or containers for development teams. But from the platform provider standpoint, using Linux containers to run workloads of different customers on the same host would be a security nightmare. Instead, functions need to be run in something as lightweight as Linux containers and as secure as Virtual Machines.

AWS Firecracker to the rescue!

The main component of Firecracker is a virtual machine monitor (VMM) that uses the Linux Kernel Virtual Machine (KVM) to create and run microVMs. Firecracker has a minimalist design. It excludes unnecessary devices and guest-facing functionality to reduce the memory footprint and attack surface area of each microVM. This improves security, decreases the startup time, and increases hardware utilization. Firecracker has also been integrated in container runtimes, for example Kata Containers and Weaveworks Ignite.

But surprisingly or not, Firecracker is not an OCI-compliant runtime by itself... However, there seems to be a way to put an OCI runtime into a Firecracker microVM and get the best of all worlds - portability of containers, lightness of Firecracker microVMs, and full isolation from the host operating system. I'm definitely going to have a closer look at this option, and I'll share my findings in future posts.

UPD: Check out this awesome drawing showing how Kata Containers does it. In particular, Kata Containers can use Firecracker as VMM.

Another interesting project in the area of secure containers is Google's gVisor:

gVisor is an application kernel, written in Go, that implements a substantial portion of the Linux system surface. It includes an Open Container Initiative (OCI) runtime called runsc that provides an isolation boundary between the application and the host kernel. The runsc runtime integrates with Docker and Kubernetes, making it simple to run sandboxed containers.

Unlike Firecracker, gVisor provides an OCI-compliant runtime. But there is no full-fledged hypervisor like KVM for gVisor-backed containers. Instead, it emulates the kernel in user space. Sounds pretty cool, but the runtime overhead is likely to be noticeable.

Instead of a conclusion

To summarize, containers aren't just slightly more isolated and restricted Linux processes. Instead, they are standardized execution environments improving workload portability. Linux containers are the most widespread form of containers nowadays, but the need for more secure containers is growing. The OCI Runtime Spec defines VM-backed containers, and the Kata project makes them real. So, it's an exciting time to explore the containerverse!
